Google AI Overviews cite YouTube more than any medical site for health queries, study suggests

Google’s search feature AI Overviews cites YouTube more than any medical website when answering queries about health conditions, according to research that raises fresh questions about a tool seen by 2 billion people each month.

The company has said its AI summaries, which appear at the top of search results and use generative AI to answer questions from users, are “reliable” and cite reputable medical sources such as the Centers for Disease Control and Prevention and the Mayo Clinic.

However, a study that analysed responses to more than 50,000 health queries, captured using Google searches from Berlin, found the top cited source was YouTube. The video-sharing platform is the world’s second most visited website, after Google itself, and is owned by Google.

Researchers at SE Ranking, a search engine optimisation platform, found YouTube made up 4.43% of all AI Overview citations. No hospital network, government health portal, medical association or academic institution came close to that number, they said.

“This matters because YouTube is not a medical publisher,” the researchers wrote. “It is a general-purpose video platform. Anyone can upload content there (eg board-certified physicians, hospital channels, but also wellness influencers, life coaches, and creators with no medical training at all).”

Google told the Guardian that AI Overviews was designed to surface high-quality content from reputable sources, regardless of format, and a variety of credible health authorities and licensed medical professionals created content on YouTube. The study’s findings could not be extrapolated to other regions as it was conducted using German-language queries in Germany, it said.

The research comes after a Guardian investigation found people were being put at risk of harm by false and misleading health information in Google AI Overviews responses. In one case that experts said was “dangerous” and “alarming”, Google provided bogus information about crucial liver function tests that could have left people with serious liver disease wrongly thinking they were healthy. The company later removed AI Overviews for some but not all medical searches.

The SE Ranking study analysed 50,807 healthcare-related prompts and keywords to see which sources AI Overviews relied on when generating answers. The researchers chose Germany because its healthcare system is strictly regulated by a mix of German and EU directives, standards and safety regulations.

“If AI systems rely heavily on non-medical or non-authoritative sources even in such an environment, it suggests the issue may extend beyond any single country,” they wrote.

AI Overviews surfaced on more than 82% of health searches, the researchers said. When they looked at which sources AI Overviews relied on most often for health-related answers, one result stood out immediately, they said.

The single most cited domain was YouTube with 20,621 citations out of a total of 465,823. The next most cited source was NDR.de, with 14,158 citations (3.04%). The German public broadcaster produces health-related content alongside news, documentaries and entertainment.
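The percentage shares quoted in the study follow from dividing each domain's citation count by the 465,823 total. A minimal sketch of that arithmetic, using only the two counts reported so far; the function and variable names are illustrative and do not come from the study:

```python
# Counts as reported in the article; the names used here are hypothetical.
TOTAL_CITATIONS = 465_823
reported_counts = {
    "YouTube": 20_621,
    "NDR.de": 14_158,
}


def citation_share(count: int, total: int) -> float:
    """Return count as a percentage of total, rounded to two decimal places."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100 * count / total, 2)


# Share of all AI Overview citations for each reported domain.
shares = {
    domain: citation_share(count, TOTAL_CITATIONS)
    for domain, count in reported_counts.items()
}

for domain, share in shares.items():
    print(f"{domain}: {share}%")
```

Run as written, this gives 4.43% for YouTube and 3.04% for NDR.de, matching the figures the researchers reported.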

In third place was a medical reference site, Msdmanuals.com, with 9,711 citations (2.08%). The fourth most cited source was Germany’s largest consumer health portal, Netdoktor.de, with 7,519 citations (1.61%). The fifth most cited source was a career platform for doctors, Praktischarzt.de, with 7,145 citations (1.53%).

The researchers acknowledged limitations to their study.

It was conducted as a one-time snapshot in December 2025, using German-language queries that reflected how users in Germany typically search for health information. Results could vary over time, by region, and by the phrasing of questions.

However, even with those caveats, the findings still prompted alarm.

Hannah van Kolfschooten, a researcher specialising in AI, health and law at the University of Basel who was not involved with the research, said: “This study provides empirical evidence that the risks posed by AI Overviews for health are structural, not anecdotal. It becomes difficult for Google to argue that misleading or harmful health outputs are rare cases.

“Instead, the findings show that these risks are embedded in the way AI Overviews are designed. In particular, the heavy reliance on YouTube rather than on public health authorities or medical institutions suggests that visibility and popularity, rather than medical reliability, is the central driver for health knowledge.”

A Google spokesperson said: “The implication that AI Overviews provide unreliable information is refuted by the report’s own data, which shows that the most cited domains in AI Overviews are reputable websites. And from what we’ve seen in the published findings, AI Overviews cite expert YouTube content from hospitals and clinics.”

Google said the study showed that of the 25 most cited YouTube videos, 96% were from medical channels.

However, the researchers cautioned that these videos represented fewer than 1% of all the YouTube links cited by AI Overviews on health.

“Most of them (24 out of 25) come from medical-related channels like hospitals, clinics and health organisations,” the researchers wrote.

“On top of that, 21 of the 25 videos clearly note that the content was created by a licensed or trusted source.

“So at first glance it looks pretty reassuring. But it’s important to remember that these 25 videos are just a tiny slice (less than 1% of all YouTube links AI Overviews actually cite).

“With the rest of the videos, the situation could be very different.”

Latest ChatGPT model uses Elon Musk’s Grokipedia as source, tests reveal

The latest model of ChatGPT has begun to cite Elon Musk’s Grokipedia as a source on a wide range of queries, including on Iranian conglomerates and Holocaust deniers, raising concerns about misinformation on the platform.

In tests done by the Guardian, GPT-5.2 cited Grokipedia nine times in response to more than a dozen different questions. These included queries on political structures in Iran, such as salaries of the Basij paramilitary force and the ownership of the Mostazafan Foundation, and questions on the biography of Sir Richard Evans, a British historian and expert witness against Holocaust denier David Irving in his libel trial.

Grokipedia, launched in October, is an AI-generated online encyclopedia that aims to compete with Wikipedia, and which has been criticised for propagating rightwing narratives on topics including gay marriage and the 6 January insurrection in the US.

Young will suffer most when AI ‘tsunami’ hits jobs, says head of IMF

Artificial intelligence will be a “tsunami hitting the labour market”, with young people worst affected, the head of the International Monetary Fund warned the World Economic Forum on Friday.

Kristalina Georgieva told delegates in Davos that the IMF’s own research suggested there would be a big transformation of demand for skills, as the technology becomes increasingly widespread.

“We expect over the next years, in advanced economies, 60% of jobs to be affected by AI, either enhanced or eliminated or transformed – 40% globally,” she said. “This is like a tsunami hitting the labour market.”

She suggested that in advanced economies, one in 10 jobs had already been “enhanced” by AI, tending to boost these workers’ pay, with knock-on benefits for the local economy.

TikTok announces it has finalized deal to establish US entity, sidestepping ban

TikTok announced on Thursday it had closed a deal to establish a new US entity, allowing it to sidestep a ban and ending a long legal battle.

The deal finalized by ByteDance, TikTok’s Chinese owner, sets up a majority American-owned venture, with investors including Larry Ellison’s Oracle, the private-equity group Silver Lake and Abu Dhabi’s MGX owning 80.1% of the new entity, while ByteDance will own 19.9%.

The announcement comes five years after Donald Trump first threatened to ban the popular platform in the US during his first term.

Campaigner launches £1.5bn legal action in UK against Apple over wallet’s ‘hidden fees’

The financial campaigner James Daley has launched a £1.5bn class action lawsuit against Apple over its mobile phone wallet, claiming the US tech company blocked competition and charged hidden fees that ultimately harmed 50 million UK consumers.

The lawsuit takes aim at Apple Pay, which the claim says has been the only contactless payment service available for iPhone users in Britain over the past decade.

Daley, who is the founder of the advocacy group Fairer Finance, claims this situation amounted to anti-competitive behaviour and allowed Apple to charge hidden fees, ultimately pushing up costs for banks that passed charges on to consumers, regardless of whether they owned an iPhone.

It is the first UK legal challenge to the company’s conduct in relation to Apple Pay, and takes place months after regulators like the Competition and Markets Authority and the Payment Systems Regulator began scrutinising the tech industry’s digital wallet services.

Former FTX crypto executive Caroline Ellison released from federal custody

The disgraced former cryptocurrency executive Caroline Ellison has been released from federal custody after serving about 14 months for her involvement in the multibillion-dollar FTX fraud scandal. Ellison was previously head of FTX’s associated trading arm and the on-again, off-again romantic partner of the crypto exchange’s founder, Sam Bankman-Fried.

Ellison, 31, was sentenced to 24 months in prison in 2024 after pleading guilty to seven charges, including wire fraud and money laundering. She featured prominently as a witness for the prosecution of Bankman-Fried, testifying that her former paramour directed her to commit crimes. Bankman-Fried was sentenced to 25 years in prison.

Experts warn of threat to democracy from ‘AI bot swarms’ infesting social media

Political leaders could soon launch swarms of human-imitating AI agents to reshape public opinion in a way that threatens to undermine democracy, a high-profile group of experts in AI and online misinformation has warned.

The Nobel peace prize-winning free-speech activist Maria Ressa, and leading AI and social science researchers from Berkeley, Harvard, Oxford, Cambridge and Yale are among a global consortium flagging the new “disruptive threat” posed by hard-to-detect, malicious “AI swarms” infesting social media and messaging channels.

A would-be autocrat could use such swarms to persuade populations to accept cancelled elections or overturn results, they said, amid predictions the technology could be deployed at scale by the time of the US presidential election in 2028.

The warnings, published today in Science, come alongside calls for coordinated global action to counter the risk, including “swarm scanners” and watermarked content to counter AI-run misinformation campaigns. Early versions of AI-powered influence operations have been used in the 2024 elections in Taiwan, India and Indonesia.

The ADHD grey zone: why patients are stuck between private diagnosis and NHS care

Seeing red over the Greens’ advocacy of ‘buy the supply’ housing policy | Letters

Great Ormond Street hospital cleaners win racial discrimination appeal

ADHD waiting lists ‘clogged by patients returning from private care to NHS’

Rural and coastal areas of England to get more cancer doctors

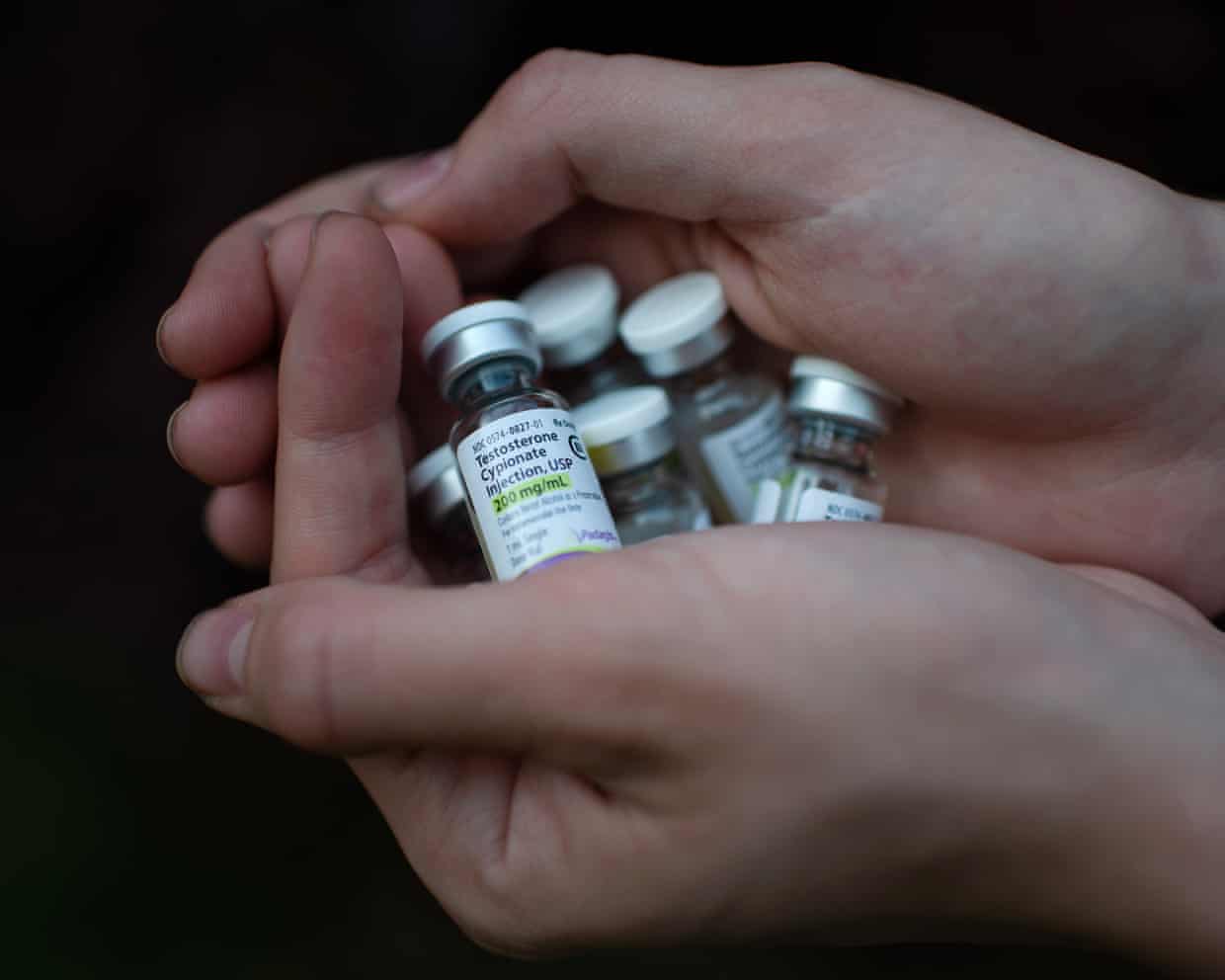

‘Manosphere’ influencers pushing testosterone tests are convincing healthy young men there is something wrong with them, study finds