How the ‘confident authority’ of Google AI Overviews is putting public health at risk

Do I have the flu or Covid? Why do I wake up feeling tired? What is causing the pain in my chest? For more than two decades, typing medical questions into the world’s most popular search engine has served up a list of links to websites with the answers. Google those health queries today and the response will likely be written by artificial intelligence. Sundar Pichai, Google’s chief executive, first set out the company’s plans to enmesh AI into its search engine at its annual conference in Mountain View, California, in May 2024. Starting that month, he said, US users would see a new feature, AI Overviews, which would provide information summaries above traditional search results. The change marked the biggest shake-up of Google’s core product in a quarter of a century.

By July 2025, the technology had expanded to more than 200 countries in 40 languages, with 2 billion people served AI Overviews each month. With the rapid rollout of AI Overviews, Google is racing to protect its traditional search business, which generates about $200bn (£147bn) a year, before upstart AI rivals can derail it. “We are leading at the frontier of AI and shipping at an incredible pace,” Pichai said last July. AI Overviews in particular were “performing well”, he added. But overviews carry risks, experts say.

They use generative AI to provide snapshots of information about a topic or question, adding conversational answers above the traditional search results in the blink of an eye. They can cite sources, but do not necessarily know when that source is incorrect. Within weeks of the feature launching in the US, users encountered untruths across a range of subjects. One AI Overview said Andrew Jackson, the seventh US president, graduated from college in 2005. Elizabeth Reid, Google’s head of search, responded to criticism in a blog post.

She conceded that “in a small number of cases”, AI Overviews had misinterpreted language on web pages and presented inaccurate information. “At the scale of the web, with billions of queries coming in every day, there are bound to be some oddities and errors,” she wrote. But when those questions are about health, accuracy and context are essential and non-negotiable, experts say. Google is facing mounting scrutiny of its AI Overviews for medical queries after a Guardian investigation found people were being put at risk of harm by false and misleading health information. The company says AI Overviews are “reliable”.

But the Guardian found some medical summaries served up inaccurate health information and put people at risk of harm. In one case, which experts said was “really dangerous”, Google wrongly advised people with pancreatic cancer to avoid high-fat foods. Experts said this was the exact opposite of what should be recommended, and may increase the risk of patients dying from the disease. In another “alarming” example, the company provided bogus information about crucial liver function tests, which could leave people who had serious liver disease wrongly thinking they were healthy. What AI Overviews said was normal could vary drastically from what was actually considered normal, experts said.

The summaries could lead to seriously ill patients wrongly thinking they had a normal test result and not bothering to attend follow-up appointments. AI Overviews about women’s cancer tests also provided “completely wrong” information, which experts said could result in people dismissing genuine symptoms. Google initially sought to downplay the Guardian’s findings. From what its own clinicians could assess, the company said, the AI Overviews that alarmed experts linked to reputable sources and recommended seeking expert advice. “We invest significantly in the quality of AI Overviews, particularly for topics like health, and the vast majority provide accurate information,” a spokesperson said.

Within days, however, the company removed some of the AI Overviews for health queries flagged by the Guardian. “We do not comment on individual removals within search,” a spokesperson said. “In cases where AI Overviews miss some context, we work to make broad improvements, and we also take action under our policies where appropriate.” While experts welcomed the removal of some AI summaries for health queries, many remain worried. “Our bigger concern with all this is that it is nit-picking a single search result and Google can just shut off the AI Overviews for that but it’s not tackling the bigger issue of AI Overviews for health,” says Vanessa Hebditch, the director of communications and policy at the British Liver Trust, a liver health charity.

“There are still too many examples out there of Google AI Overviews giving people inaccurate health information,” adds Sue Farrington, the chair of the Patient Information Forum, which promotes evidence-based health information to patients, the public and healthcare professionals. A new study has prompted more concerns. When researchers analysed the responses to more than 50,000 health-related searches in Germany to see which sources AI Overviews rely on most, one result stood out immediately. The single most cited domain was YouTube. “This matters because YouTube is not a medical publisher,” the researchers wrote.

“It is a general-purpose video platform. Anyone can upload content there (eg, board-certified physicians, hospital channels, but also wellness influencers, life coaches and creators with no medical training at all).” In medicine, it is not only where answers come from that matter, or their level of accuracy, but how they are presented to users, experts say. “With AI Overviews, users no longer encounter a range of sources that they can compare and critically assess,” says Hannah van Kolfschooten, a researcher in AI, health and law at the University of Basel. “Instead, they are presented with a single, confident, AI-generated answer that exhibits medical authority.

“This means that the system does not merely reflect health information online, but actively restructures it. When that response is built on sources never designed to meet medical standards, such as YouTube videos, this creates a new form of unregulated medical authority online.” Google says AI Overviews are built to surface information backed up by top web results, and include links to web content that supports the information presented in the summary. People can use these links to dig deeper on a topic, the company told the Guardian. But the single blocks of text in AI Overviews, combining health information from multiple sources, can cause confusion, says Nicole Gross, an associate professor in business and society at the National College of Ireland.

“Once the AI summary appears, users are much less likely to research further, which means that they are deprived of the opportunity to critically evaluate and compare information, or even deploy their common sense when it comes to health-related issues.” Experts have raised other concerns with the Guardian. Even if and when AI Overviews do provide accurate facts about a specific medical topic, they may not distinguish between strong evidence from randomised trials and weaker evidence from observational studies, they say. Some also miss important caveats about that evidence, they add. Having such claims listed next to one another in an AI Overview may also give the impression that some are better established than they really are.

Answers can also change as AI Overviews evolve, even when the science hasn’t shifted. “That means that people are getting a different answer depending on when they search, and that’s not good enough,” says Athena Lamnisos, the chief executive of the Eve Appeal cancer charity. Google told the Guardian that links included in AI Overviews were dynamic and changed based on the information that was most relevant, helpful and timely for a given search. If AI Overviews misinterpreted web content or missed some context, the company would use these errors to improve its systems, and also take action when appropriate, it said. The biggest worry is that bogus and dangerous medical information or advice in AI Overviews “ends up getting translated into the everyday practices, routines and life of a patient, even in adapted forms”, says Gross.

“In healthcare, this can turn into a matter of life and death.”

R&B star Jill Scott: ‘I like mystery – I love Sade but I don’t know what she had for breakfast’

The neo-soul singer and actor answers your questions on being taken to a go-go club as a child, training as an English teacher and getting mistaken for footballer Jill Scott

In a recent interview you gave an invaluable life lesson which involved a go-go bar and your mother’s love. What are your tips for living life between adversities? Integrity411

My mother’s ex-husband was a questionable man and after he picked me up from elementary school he used to take me to a go-go bar where ladies were dancing in their panties. I was a child, so I thought: how nice for them, I hate getting dressed too! They dance all day and then some nice people put money in their panties. The ladies would give me milk or Coca-Cola and give me a dollar, so I wanted to be a go-go dancer when I grew up. At that age I didn’t know there was anything wrong with me going there and I learned not to judge people so quickly

Letter: Colin Ford obituary

Colin Ford was a most supportive critic. In 2006 I was invited to speak about Virginia Woolf and Photography at the Women’s Library in London. Part of my paper was about Julia Margaret Cameron, Woolf’s great aunt, and her many influences on Woolf’s writing and photography. Already then the world expert on Cameron, Colin was in the audience, having trekked all the way to Whitechapel on a wet weekday evening. Terrified that I might misconstrue or misrepresent Cameron in front of him, I fumbled the slide projector

Museums must reach all parts of UK, says Nandy as £1.5bn of arts funding announced

London-based museums need to ensure they reach every part of the country, according to Lisa Nandy, the culture secretary, who on Wednesday announced a landmark £1.5bn funding package for the arts meant to restore national pride. National museums including the British Museum and the National Portrait Gallery will be handed a £600m package but the culture secretary has urged them to look outside the capital to extend their sphere of influence. “Almost all of our national institutions are based in London, which means they need to work harder to make sure that they are genuinely national institutions [by] opening opportunities for young people from every part of our country,” she said. Nandy praised the outreach work of the Royal Shakespeare Company as an example of how national institutions could engage visitors across the country

Stephen Colbert on Trump’s first year back: ‘Today’s maniacal criminality distracts us from yesterday’s maniac crimes’

Late-night hosts acknowledged one full, maniacal year of Donald Trump’s second term as president of the United States.

Tuesday 20 January marked one full year of Trump’s second presidency, and “during that time, he has monopolized our attention every second of every minute of every hour of every day,” said Stephen Colbert on The Late Show. “Which is sad. Because today we’re not focusing on the real meaning of January 20: it’s Penguin Awareness Day.” On a more serious note, “a lot has happened in a short time”, the host noted

‘We played to 8,000 Mexicans who knew every word’: how the Whitest Boy Alive conquered the world

He lit up Europe with bands ranging from Peachfuzz to Kings of Convenience. But it was the Whitest Boy Alive that sent Erlend Øye stratospheric. As they return, the soft-singing, country-hopping sensation looks back

If you were to imagine the recent evolution of music in Europe as a series of scenes from a Where’s Wally?-style puzzle book, one bespectacled, lanky figure would pop up on almost every page. There he is in mid-90s London, handing out flyers for his first band Peachfuzz. Here he is in NME at the dawn of the new millennium, fronting folk duo Kings of Convenience and spearheading the new acoustic movement

Sally Tallant appointed as new director of London’s Hayward Gallery

Sally Tallant, the former boss of the Liverpool Biennial, has been announced as the new director of the Hayward Gallery and visual arts at London’s Southbank Centre. Tallant, who is currently in charge of the Queens Museum in New York, will return to the UK to take over from Ralph Rugoff, who will step down after two decades in charge of the institution, which celebrates its 75th anniversary this year. The Leeds-born Tallant has been in the US since 2019 after an eight-year stint in charge of the Liverpool Biennial and more than a decade working at the Serpentine Gallery, where she was head of programmes until 2011. She said she was delighted to be returning to London and excited to build on the “outstanding legacy” of Rugoff, who also took charge of the Venice Biennale in 2019. She said she was looking forward to “shaping the next chapter of this vital cultural destination and civic institution”

Great Ormond Street hospital cleaners win racial discrimination appeal

ADHD waiting lists ‘clogged by patients returning from private care to NHS’

Rural and coastal areas of England to get more cancer doctors

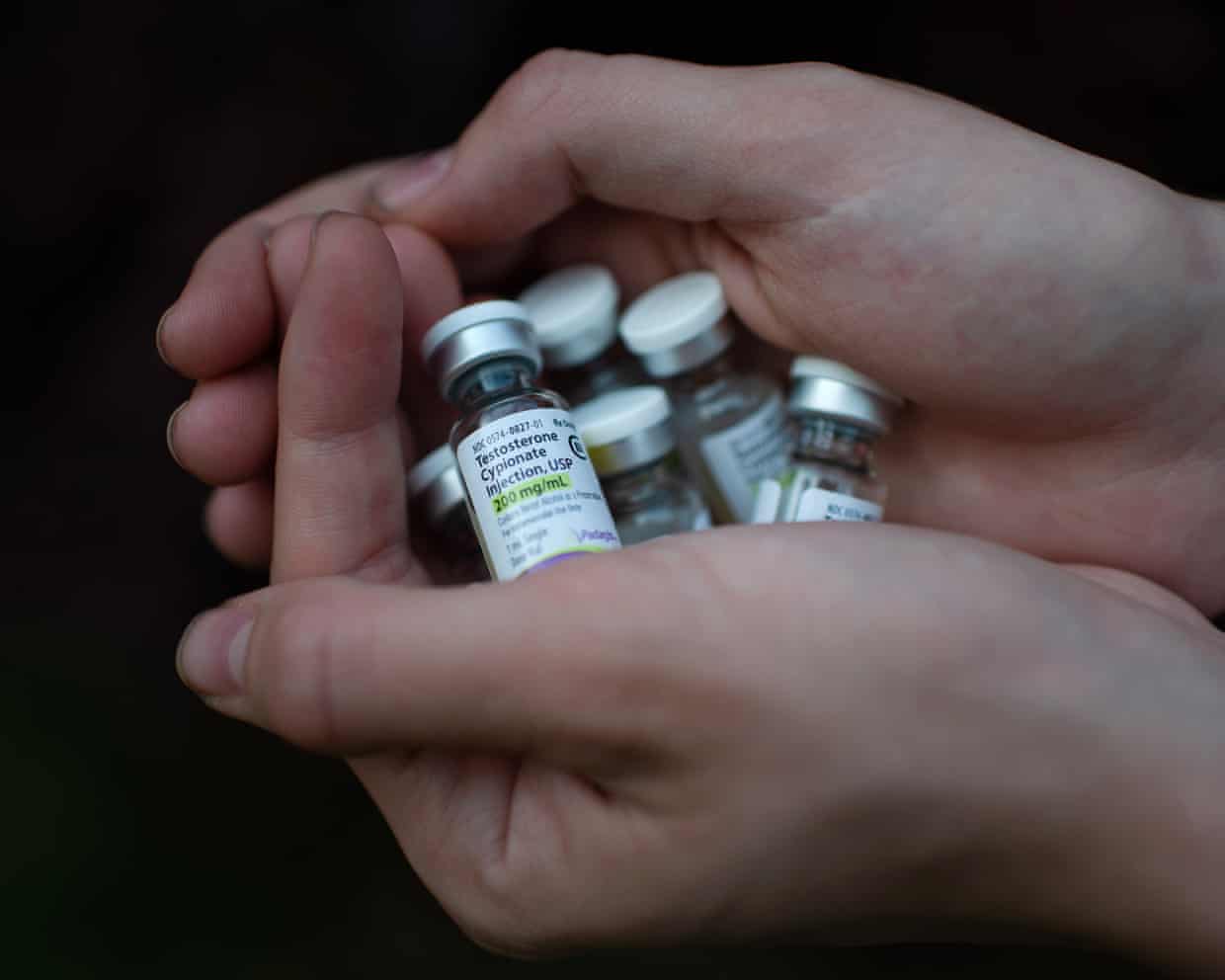

‘Manosphere’ influencers pushing testosterone tests are convincing healthy young men there is something wrong with them, study finds

John Knight obituary

Assisted dying bill backers say it is ‘near impossible’ it will pass House of Lords