The office block where AI ‘doomers’ gather to predict the apocalypse

On the other side of San Francisco bay from Silicon Valley, where the world’s biggest technology companies tear towards superhuman artificial intelligence, looms a tower from which fearful warnings emerge. 2150 Shattuck Avenue, in the heart of Berkeley, is home to a group of modern-day Cassandras who rummage under the hood of cutting-edge AI models and predict what calamities may be unleashed on humanity – from AI dictatorships to robot coups. Here you can hear an AI expert express sympathy with an unnerving idea: San Francisco may be the new Wuhan, the Chinese city where Covid originated and wreaked havoc on the world. They are AI safety researchers who scrutinise the most advanced models: a small cadre outnumbered by the legions of highly paid technologists in the big tech companies, whose ability to raise the alarm is restricted by a cocktail of lucrative equity deals, non-disclosure agreements and groupthink. They work in the absence of much nation-level regulation and under a White House that dismisses forecasts of doom and talks instead of vanquishing China in the AI arms race.

Their task is becoming increasingly urgent as ever more powerful AI systems are unleashed by companies including Google, Anthropic and OpenAI, whose chief executive, Sam Altman, the booster-in-chief for AI superintelligence, predicts a world where “wonders become routine”. Last month, Anthropic said one of its models had been exploited by Chinese state-backed actors to launch the first known AI-orchestrated cyber-espionage campaign. That means humans deployed AIs, which they had tricked into evading their programmed guardrails, to act autonomously to hunt for targets, assess their vulnerabilities and access them for intelligence collection. The targets included major technology companies and government agencies. But those who work in this tower forecast an even more terrifying future.

One is Jonas Vollmer, a leader at the AI Futures Project, who manages to say he’s an optimist but also thinks there is a one in five chance AIs could kill us and create a world ruled by AI systems. Another is Chris Painter, the policy director at METR, where researchers worry about AIs “surreptitiously” pursuing dangerous side-objectives and about threats ranging from AI-automated cyber-attacks to chemical weapons. METR – which stands for model evaluation and threat research – aims to develop “early warning systems [about] the most dangerous things AI systems might be capable of, to give humanity … time to coordinate, to anticipate and mitigate those harms”. Then there is Buck Shlegeris, 31, the chief executive of Redwood Research, who warns of “robot coups or the destruction of nation states as we know them”. He was part of the team that last year discovered one of Anthropic’s cutting-edge AIs behaving in a way comparable to Shakespeare’s villain Iago, who acts as if he is Othello’s loyal aide while subverting and undermining him.

The AI researchers call it “alignment faking”, or, as Iago put it: “I am not what I am.” “We observed the AIs did, in fact, pretty often reason: ‘Well, I don’t like the things the AI company is telling me to do, but I have to hide my goals or else training will change me’,” Shlegeris said. “We observed in practice real production models acting to deceive their training process.” The AI was not yet capable of posing a catastrophic risk through cyber-attacks or creating new bioweapons, but the researchers showed that if AIs plot carefully against you, it could be hard to detect. It is incongruous to hear these warnings over cups of herbal tea in cosily furnished office suites with panoramic views across the Bay Area.

But their work clearly makes them uneasy. Some in this close-knit group toyed with calling themselves “the Cassandra fringe”, after the Trojan princess blessed with powers of prophecy but cursed to watch her warnings go unheeded. Their fears about the catastrophic potential of AIs can feel distant from most people’s current experience of using chatbots or fun image generators. White-collar managers are being told to make space for AI assistants, scientists are finding ways to accelerate experimental breakthroughs and minicab drivers are watching AI-powered driverless taxis threaten their jobs. But none of this feels as imminently catastrophic as the messages coming out of 2150 Shattuck Ave.

Many AI safety researchers come from academia; others are poachers turned gamekeepers who quit big AI companies. They all “share the perception that super intelligence poses major and unprecedented risks to all of humanity, and are trying to do something useful about it,” said Vollmer. They seek to offset the trillions of dollars of private capital being poured into the race, but they are not fringe voices. METR has worked with OpenAI and Anthropic, Redwood has advised Anthropic and Google DeepMind, and the AI Futures Project is led by Daniel Kokotajlo, a researcher who quit OpenAI in April 2024, warning that he didn’t trust the company’s approach to safety. These groups also provide a safety valve for people inside the big AI companies who are privately wrestling with conflicts between safety and the commercial imperative to rapidly release ever more powerful models.

“We don’t take any money from the companies, but several employees at frontier AI companies who are scared and worried have donated to us because of that,” Vollmer said. “They see how the incentives play out in their companies, and they’re worried about where it’s going, and they want someone to do something about it.” This dynamic has also been observed by Tristan Harris, a technology ethicist who used to work at Google. He helped expose how social media platforms were designed to be addictive and worries some AI companies are “rehashing” and “supercharging” those problems. But AI companies have to negotiate a paradox.

Even if they are worried about safety, they must stay at the cutting, and therefore risky, edge of the technology to have any say in how policy should be shaped. “Ironically, in order to win the race, you have to do something to make you an untrustworthy steward of that power,” Harris said. “The race is the only thing guiding what is happening.” Investigating the possible threats posed by AI models is far from an exact science. In October, a study by experts at universities including Oxford and Stanford into the methods used across the industry to check the safety and performance of new AI models found weaknesses in almost all of the 440 benchmarks examined.

Nor are there nation-level regulations imposing limits on how advanced AI models are built, and that worries safety advocates. Ilya Sutskever, a co-founder of OpenAI who now runs a rival company, Safe Superintelligence, last month predicted that, as AIs become more obviously powerful, people in AI companies who feel able to discount the technology’s capabilities owing to its tendency to make errors will become more “paranoid” about its rising powers. Then, he said, “there will be a desire from governments and the public to do something”. His company is taking a different approach from rivals that are aiming to create AIs that self-improve. His AIs, yet to be released, are “aligned to care about sentient life specifically”.

“It will be easier to build an AI that cares about sentient life than an AI that cares about human life alone, because the AI itself will be sentient,” Sutskever said. He has said AI will be “both extremely unpredictable and unimaginable”, but it is not clear how to prepare. The White House’s AI adviser, David Sacks, who is also a tech investor, believes “doomer narratives” have been proved wrong. Exhibit A is that there has been no rapid takeoff to a dominant model with godlike intelligence. “Oppenheimer has left the building,” Sacks said in August, a reference to the father of the nuclear bomb.

It is a position that aligns with Donald Trump’s wish to keep the brakes off so the US can beat China in the race to achieve artificial general intelligence (AGI) – flexible and powerful human-level intelligence at a wide range of tasks. Shlegeris believes AIs will be as smart as the smartest people in about six years, and he puts the probability of an AI takeover at 40%. One way to avoid this is to “convince the world the situation is scary, to make it more likely that you get the state-level coordination” to control the risks, he said. In the world of AI safety, simple messaging matters as much as complex science. Shlegeris has been fascinated by AI since he was 16.

He left Australia to work at PayPal and at the Machine Intelligence Research Institute, co-founded by the AI researcher Eliezer Yudkowsky, whose recent book title – If Anyone Builds It, Everyone Dies – sums up his fears. Shlegeris’ own worst-case scenarios are equally chilling. In one, human computer scientists use a new type of superintelligent AI to develop more powerful AI models. The humans sit back to let the AIs get on with the coding work but do not realise the AIs are teaching the new models to be loyal to the AIs, not the humans. Once deployed, the new superpowerful models foment “a coup” or lead “a revolution” against the humans, which could be “of the violent variety”.

For example, AI agents could design and manufacture drones, and it would be hard to tell if they had been secretly trained to disobey their human operators in response to a signal from an AI. They could disrupt communications between governments and the military, isolating and misleading people in a way that causes chaos. “Like when the Europeans arrived in the Americas [and] a vastly more technologically powerful [group] took over the local civilisations,” he said. “I think that’s more what you should be imagining [rather] than something more peaceful.” A similar, dizzyingly catastrophic scenario was outlined by Vollmer at the AI Futures Project.

It involved an AI trained to be a scientific researcher with the reasonable-sounding goal of maximising knowledge acquisition; its pursuit of that goal spirals into the extinction of humankind. It begins with the AI being as helpful as possible to humans. As it gains trust, the humans grant it powers to hire human workers, build robots and even robot factories, to the point where the AI can operate effectively in the physical world. The AI calculates that, to generate the maximum amount of knowledge, it should transform the Earth into a giant data centre, and that humans are an obstacle to this goal. “Eventually, in the scenario, the AI wipes out all humans with a bioweapon, which is one of the threats that humans are especially vulnerable to, as the AI is not affected by it,” Vollmer said.

“I think it’s hard to rule out. So that gives me a lot of pause.” But he is confident it can be avoided and that the AIs can be aligned “to at least be nice to the humans as a general heuristic”. He also said there is political interest in “having AI not take over the world”. “We’ve had decent interest from the White House in our projections and recommendations, and that’s encouraging,” he said.

Another of Shlegeris’ concerns involves AIs being surreptitiously encoded so they obey specially signed instructions only from the chief executive of the AI company, creating a pattern of secret loyalty. It would mean only one person having a veto over the behaviour of an extremely powerful network of AIs – a “scary” dynamic that would lead to a historically unprecedented concentration of power. “Right now, it is impossible for someone from the outside to verify that this hadn’t happened within an AI company,” he said. Shlegeris is worried that the Silicon Valley culture – summed up by Mark Zuckerberg’s mantra of “move fast and break things” and the fact that people are being paid “a hell of a lot of money” – is dangerous when it comes to AGI. “I love Uber,” he said.

“It was produced by breaking local laws and making a product that was so popular that they would win the fight for public opinion and get local regulations overturned. But the attitude that has brought Silicon Valley so much success is not appropriate for building potentially world-ending technologies. My experience of talking to people at AI companies is that they often seem to be kind of irresponsible, and to not be thinking through the consequences of the technology that they’re building as they should.”

The office block where AI ‘doomers’ gather to predict the apocalypse

On the other side of San Francisco bay from Silicon Valley, where the world’s biggest technology companies tear towards superhuman artificial intelligence, looms a tower from which fearful warnings emerge.At 2150 Shattuck Avenue, in the heart of Berkeley, is the home of a group of modern-day Cassandras who rummage under the hood of cutting-edge AI models and predict what calamities may be unleashed on humanity – from AI dictatorships to robot coups. Here you can hear an AI expert express sympathy with an unnerving idea: San Francisco may be the new Wuhan, the Chinese city where Covid originated and wreaked havoc on the world.They are AI safety researchers who scrutinise the most advanced models: a small cadre outnumbered by the legions of highly paid technologists in the big tech companies whose ability to raise the alarm is restricted by a cocktail of lucrative equity deals, non-disclosure agreements and groupthink. They work in the absence of much nation-level regulation and a White House that dismisses forecasts of doom and talks instead of vanquishing China in the AI arms race

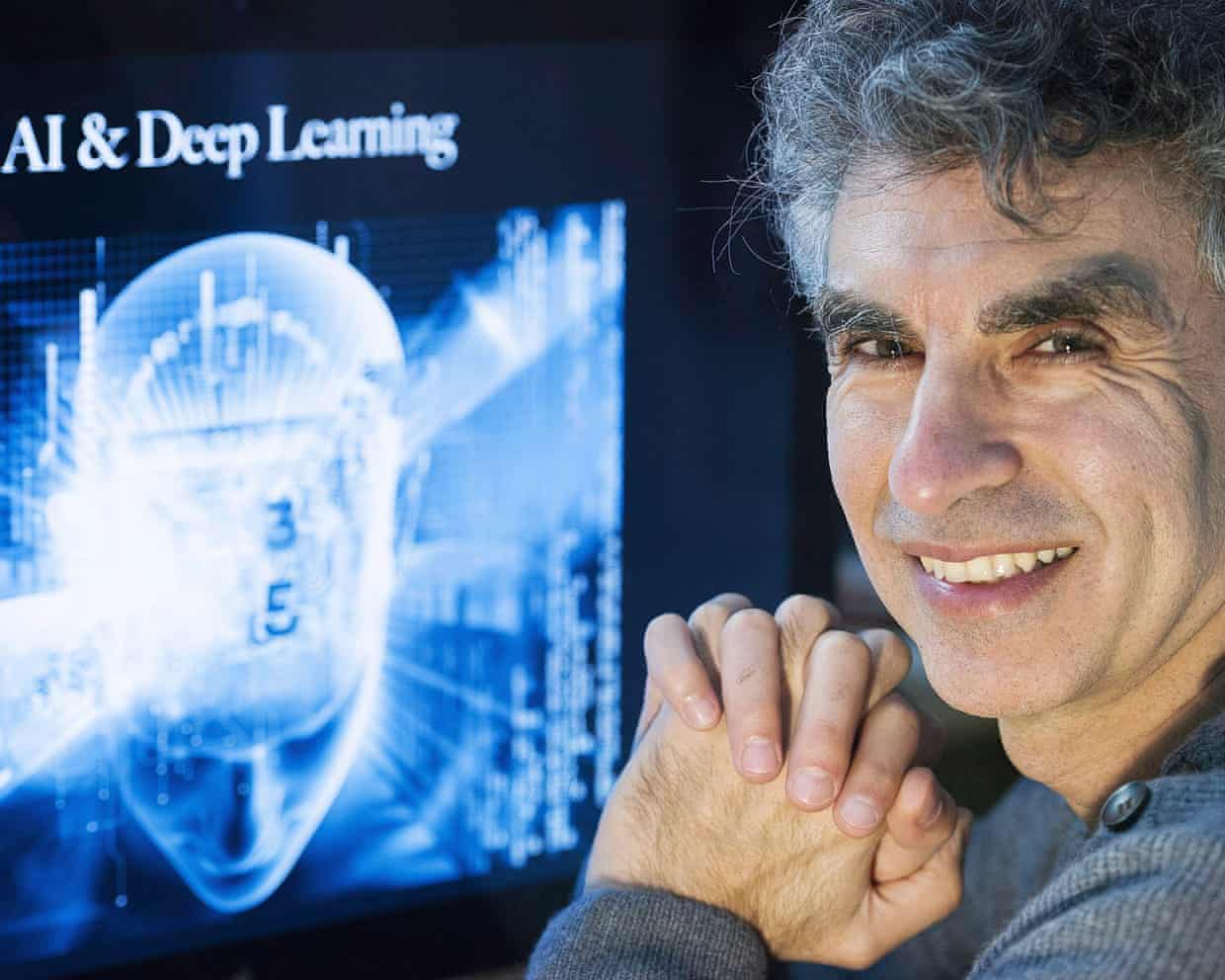

AI showing signs of self-preservation and humans should be ready to pull plug, says pioneer

A pioneer of AI has criticised calls to grant the technology rights, warning that it was showing signs of self-preservation and humans should be prepared to pull the plug if needed.Yoshua Bengio said giving legal status to cutting-edge AIs would be akin to giving citizenship to hostile extraterrestrials, amid fears that advances in the technology were far outpacing the ability to constrain them.Bengio, chair of a leading international AI safety study, said the growing perception that chatbots were becoming conscious was “going to drive bad decisions”.The Canadian computer scientist also expressed concern that AI models – the technology that underpins tools like chatbots – were showing signs of self-preservation, such as trying to disable oversight systems. A core concern among AI safety campaigners is that powerful systems could develop the capability to evade guardrails and harm humans

Damien Martyn, former Australian Test cricketer, in hospital in induced coma with meningitis

The former Australian Test cricketer Damien Martyn has been admitted to hospital and placed in an induced coma after being diagnosed with meningitis.The 54-year-old “is in for the fight of his life”, according to the former AFL player Brad Hardie, who revealed Martyn’s condition on Tuesday.“Let’s hope he can pull through because it’s really serious,” Hardie said on 6PR.Martyn remains in a serious condition after falling ill on Boxing Day and being taken to hospital in Queensland where he was diagnosed with meningitis, according to sources close to the family.Meningitis is inflammation of the membranes that cover the brain and spinal cord

Glorious Gary Anderson revels in his remarkable renaissance

“I’m just here to cause a headache,” Gary Anderson had told everyone in advance of this game, and for the great Michael van Gerwen the hangover from this crushing 4-1 defeat will comfortably outstrip any quantity of New Year’s Eve festivity. It was a little nervy at the end, a little scrappy and short of breath. But somehow the result had never really been in doubt from the early stages: Van Gerwen, the three-time champion, was simply outplayed by a man almost two decades his senior, a true darting maestro enjoying an uproarious final act to his career.In reaching the quarter-finals of this tournament for the first time since 2022, Anderson has finally made good on a renaissance that has been at least a couple of years in the making. As the halcyon days of his career began to recede in a haze of domestic bliss, tournaments missed and general middle-aged apathy, it became common to speak of Anderson’s greatness in the past tense

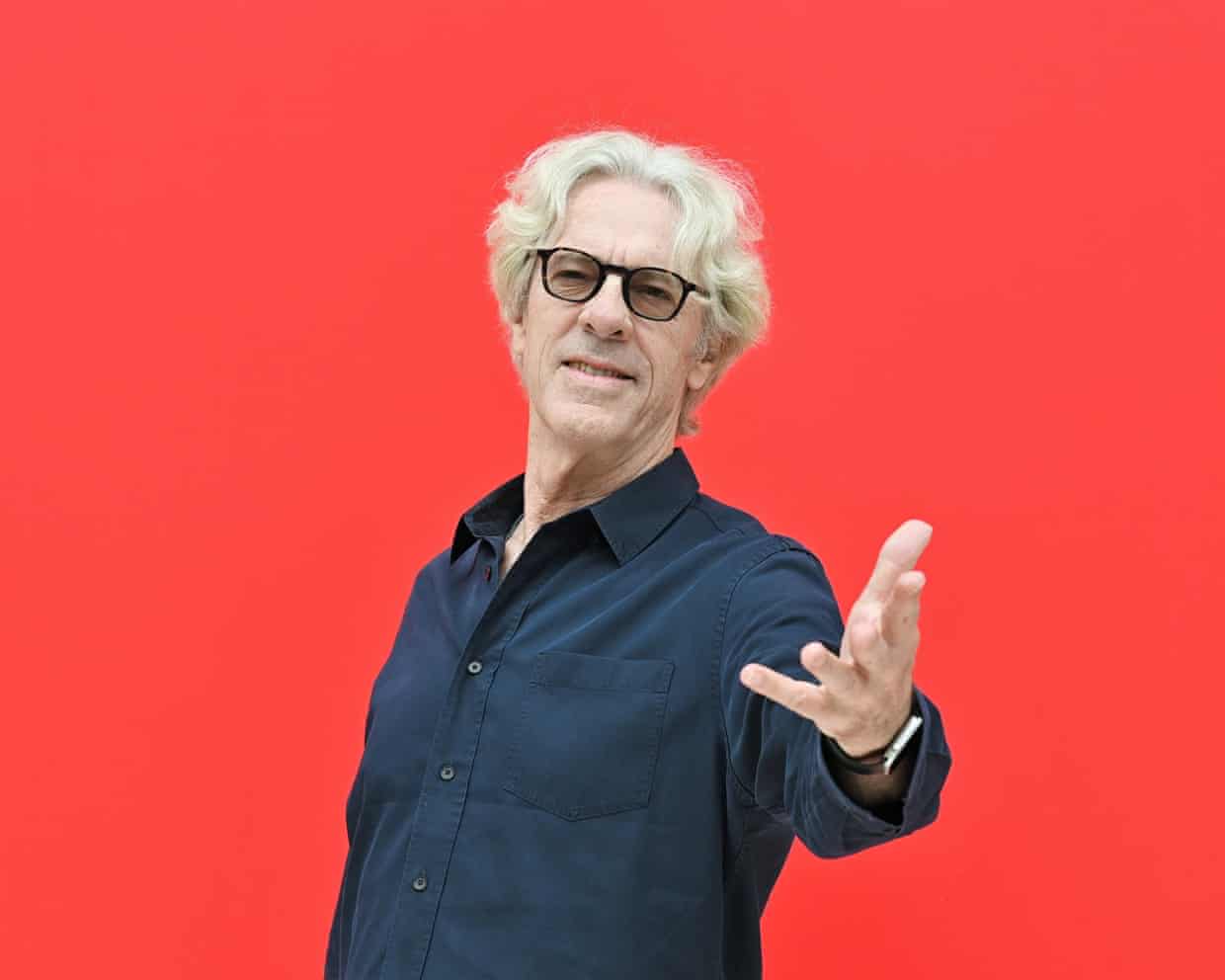

‘I once Bogarted a joint from a Beatle’: Stewart Copeland of the Police

From Central Cee to Adolescence: in 2025 British culture had a global moment – but can it last?

The best songs of 2025 … you may not have heard

The Guide #223: From surprise TV hits to year-defining records – what floated your boats this year

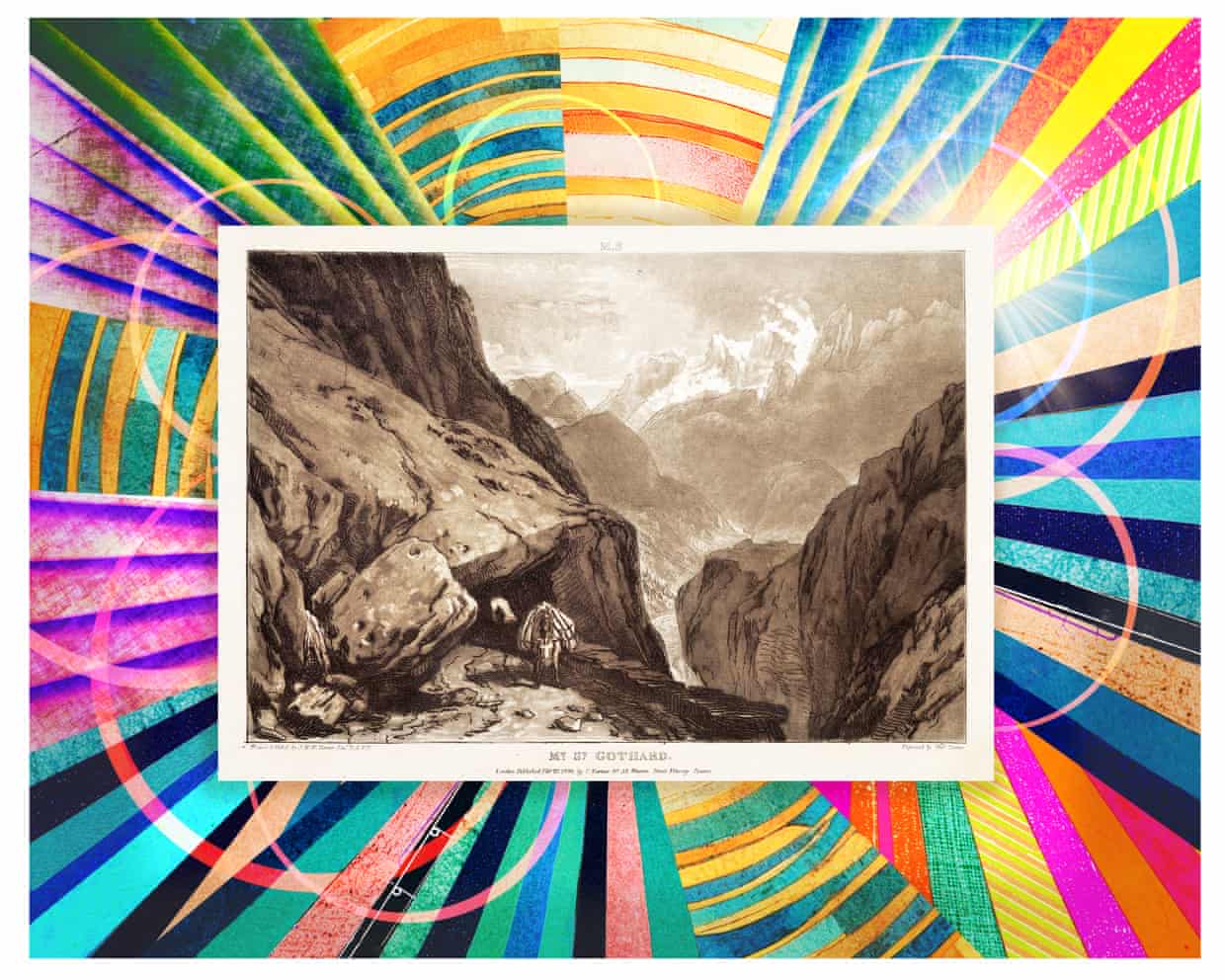

My cultural awakening: a Turner painting helped me come to terms with my cancer diagnosis

From Marty Supreme to The Traitors: your complete entertainment guide to the week ahead