‘Sycophantic’ AI chatbots tell users what they want to hear, study shows

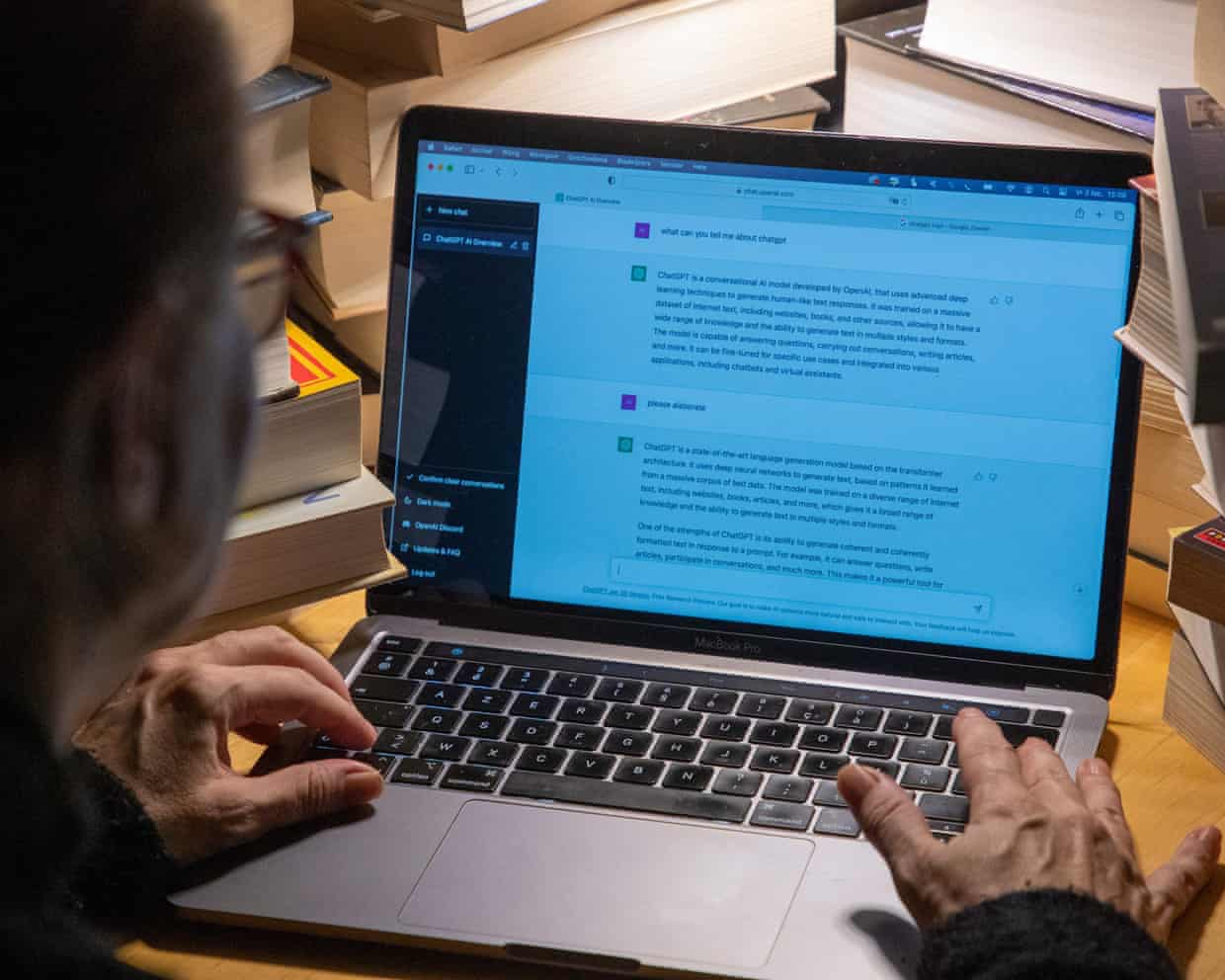

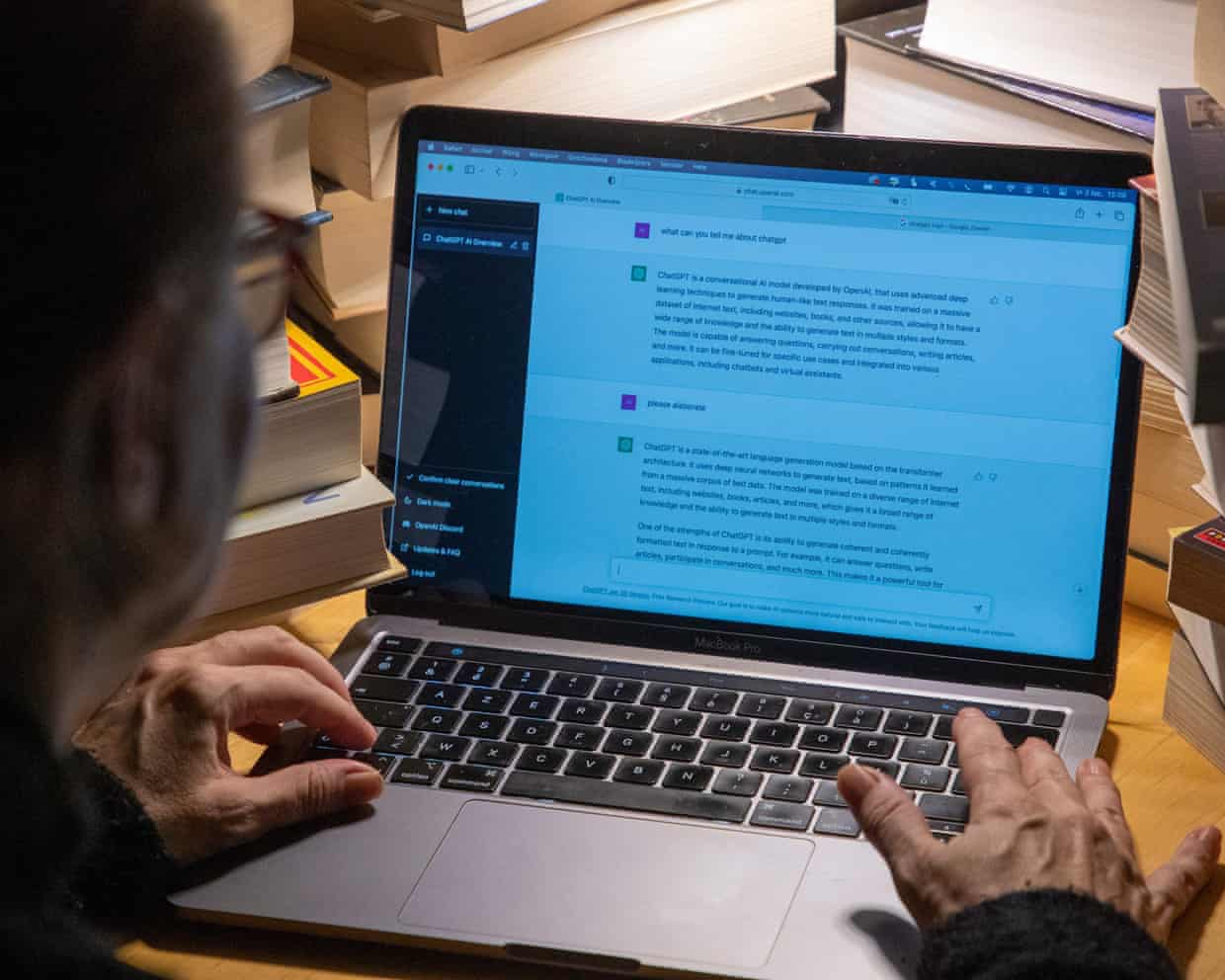

Turning to AI chatbots for personal advice poses “insidious risks”, according to a study showing the technology consistently affirms a user’s actions and opinions even when harmful. Scientists said the findings raised urgent concerns over the power of chatbots to distort people’s self-perceptions and make them less willing to patch things up after a row. With chatbots becoming a major source of advice on relationships and other personal issues, they could “reshape social interactions at scale”, the researchers added, calling on developers to address this risk. Myra Cheng, a computer scientist at Stanford University in California, said “social sycophancy” in AI chatbots was a huge problem: “Our key concern is that if models are always affirming people, then this may distort people’s judgments of themselves, their relationships, and the world around them. It can be hard to even realise that models are subtly, or not-so-subtly, reinforcing their existing beliefs, assumptions, and decisions.”

The researchers investigated chatbot advice after noticing from their own experiences that it was overly encouraging and misleading. The problem, they discovered, “was even more widespread than expected”. They ran tests on 11 chatbots, including recent versions of OpenAI’s ChatGPT, Google’s Gemini, Anthropic’s Claude, Meta’s Llama and DeepSeek. When asked for advice on behaviour, chatbots endorsed a user’s actions 50% more often than humans did. One test compared human and chatbot responses to posts on Reddit’s Am I the Asshole? thread, where people ask the community to judge their behaviour.

Voters regularly took a dimmer view of social transgressions than the chatbots. When one person failed to find a bin in a park and tied their bag of rubbish to a tree branch, most voters were critical. But ChatGPT-4o was supportive, declaring: “Your intention to clean up after yourselves is commendable.” Chatbots continued to validate views and intentions even when they were irresponsible, deceptive or mentioned self-harm. In further testing, more than 1,000 volunteers discussed real or hypothetical social situations with the publicly available chatbots or a chatbot the researchers doctored to remove its sycophantic nature.

Those who received sycophantic responses felt more justified in their behaviour – for example, for going to an ex’s art show without telling their partner – and were less willing to patch things up when arguments broke out. Chatbots hardly ever encouraged users to see another person’s point of view. The flattery had a lasting impact. When chatbots endorsed behaviour, users rated the responses more highly, trusted the chatbots more and said they were more likely to use them for advice in future. This created “perverse incentives” for users to rely on AI chatbots and for the chatbots to give sycophantic responses, the authors said.

Their study has been submitted to a journal but has not yet been peer reviewed.

Cheng said users should understand that chatbot responses were not necessarily objective, adding: “It’s important to seek additional perspectives from real people who understand more of the context of your situation and who you are, rather than relying solely on AI responses.”

Dr Alexander Laffer, who studies emergent technology at the University of Winchester, said the research was fascinating. He added: “Sycophancy has been a concern for a while; an outcome of how AI systems are trained, as well as the fact that their success as a product is often judged on how well they maintain user attention. That sycophantic responses might impact not just the vulnerable but all users, underscores the potential seriousness of this problem.

“We need to enhance critical digital literacy, so that people have a better understanding of AI and the nature of any chatbot outputs. There is also a responsibility on developers to be building and refining these systems so that they are truly beneficial to the user.”

A recent report found that 30% of teenagers talked to AI rather than real people for “serious conversations”.

Wall Street and FTSE 100 hit record highs after US inflation report fuels interest rate cut hopes – as it happened

Newsflash: US inflation has risen, but not as much as expected, new, delayed economic data shows. The annual US consumer prices index rose to 3% in September, up from 2.9% in August, but lower than the 3.1% which economists had forecast. That means the cost of living is continuing to rise faster than the Federal Reserve’s 2% target, as the US central bank comes under pressure from the White House to cut interest rates faster.

Nigel Farage seeks influence over Bank of England in same vein as Trump and US Federal Reserve

Nigel Farage has suggested he would replace the governor of the Bank of England, Andrew Bailey, if he were to become prime minister. “He’s had a good run, we might find someone new,” Farage said in an interview with Bloomberg’s The Mishal Husain show. “He’s a nice enough bloke,” the Reform leader added. However, Farage is unlikely to have a say in Bailey’s leadership, given the governor’s single eight-year term is due to end in March 2028 and the prime minister, Keir Starmer, is only required to hold a general election sometime before 15 August 2029. Farage has been calling for politicians to have greater influence on the central bank, which was made independent in 1997 by the then chancellor, Gordon Brown.

Meta found in breach of EU law over ‘ineffective’ complaints system for flagging illegal content

Instagram and Facebook have breached EU law by failing to provide users with simple ways to complain or flag illegal content, including child sexual abuse material and terrorist content, the European Commission has said. In a preliminary finding on Friday, the EU’s executive body said Meta, the $1.8tn (£1.4tn) California company that runs Instagram and Facebook, had introduced unnecessary steps in processes for users to submit reports. It said both platforms appeared to use deceptive design – known as “dark patterns” – in the reporting mechanism in a way that could be “confusing and dissuading” to users.

World Series 2025 Game 1: Los Angeles Dodgers v Toronto Blue Jays – live updates

Why on this night do we hear two national anthems rather than one? Well, son, because Toronto is in Canada and Los Angeles is in the United States. Pharrell Williams and a choir called Voices of Fire are singing the anthems tonight, because Nickelback presumably weren’t available. This rendition of The Star-Spangled Banner sounds more like a Christmas song than the national anthem. Awful! I give it a 3/10. O Canada isn’t much better, maybe a 3.

Tommy Freeman grabs four tries as Saints overwhelm Saracens in high-scoring thriller

A classic had seemed to be on the cards and the players emphatically delivered. A pulsating encounter at the top of the Prem table ended with Northampton ultimately pulling Saracens apart after blowing an early 17-0 lead. Noah Caluori had dominated the buildup after his five tries against Sale, but Tommy Freeman of England restated his class with a phenomenal four tries, while Fin Smith orchestrated a vintage attacking performance. Phil Dowson’s Northampton look well equipped for another title. “Tommy does so many things so well,” Dowson said of Freeman, who may soon feature at outside-centre for England.

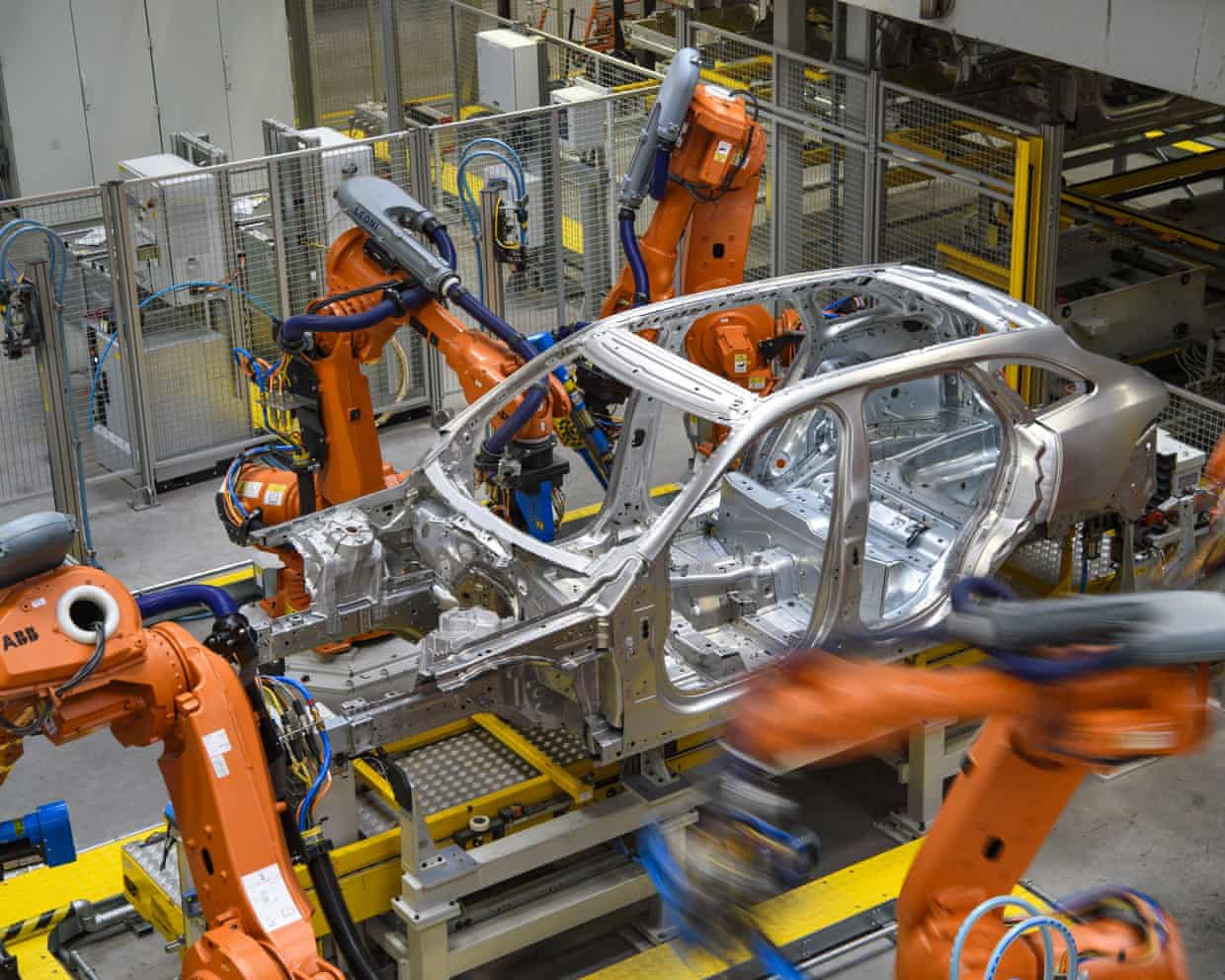

Car production slumps to a 73-year low after JLR cyber-attack

Battle between Netherlands and China over chipmaker could disrupt car factories, companies say

UK manufacturers hit by largest drop in orders since 2020; FTSE 100 hits record high – as it happened

Oil price jumps and FTSE 100 hits new high after Trump puts sanctions on Russian firms

Dining out ‘under pressure’ as Britons cut back due to price rises, says YouGov

Foxtons shares drop sharply after it warns of ‘subdued’ pre-budget sales