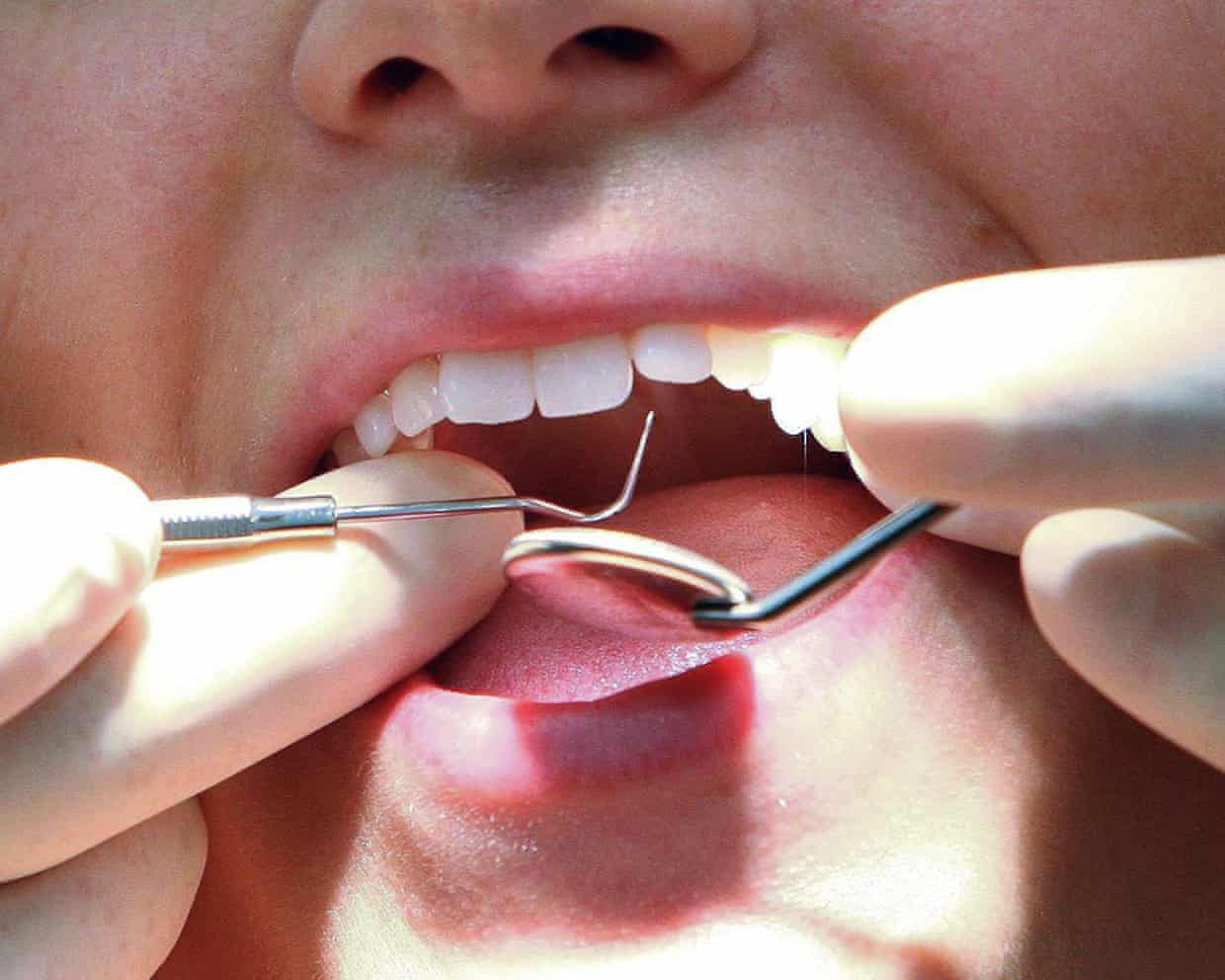

UK’s private dentistry market faces review after price jumps of more than 23%

The UK’s competition watchdog has launched a review into the £8bn private dentistry market after the price of a consultation increased by nearly 25% over a two-year period. One in five people in Great Britain sought private dental care in 2024 in part because they could not access NHS treatment. Announcing its investigation, the Competition and Markets Authority (CMA) said it wanted to make sure the market was “working well for UK consumers”. The CMA said dentistry played “a critical role in people’s health and wellbeing” and that demand for private services had risen sharply in recent years. Against this backdrop the regulator pointed to independent price data that showed average prices had “increased significantly”.

‘A space of their own’: how cancer centres designed by top architects can offer hope

Maggie Keswick Jencks received her weekly breast cancer treatment in a windowless neon-lit room in Edinburgh’s Western general hospital. Her husband, the renowned landscape designer Charles, later described it as a kind of “architectural aversion therapy”. It was then, in the early 1990s, that the Scottish artist and garden designer imagined her own blueprint that would allow cancer patients “a space of their own” within the alienating, clinical confines of the hospital estate, one where they might “not lose the joy of living in the fear of dying”. The first Maggie’s Centre opened in Edinburgh in 1996, a year after her death, designed by Richard Murphy and housed in a converted stable block in the Western general grounds. Three decades on, there are more than 30 of these hospital-adjacent cancer support centres across the UK and overseas, and this legacy of conscious design is celebrated in a free exhibition at the V&A Dundee from Friday.

UK government ‘effectively allowed’ child sexual abuse, campaigners say

Campaigners have accused the UK government of in effect allowing child abuse to continue by having an “inconsistent and arbitrary” approach to implementing recommendations from a seven-year statutory inquiry. The claim was made at the high court in London, where a judge said a legal action against the Home Office could continue. The Maggie Oliver Foundation is taking action over the government’s alleged failure to adopt all the changes recommended by the independent inquiry into child sexual abuse (IICSA), which conducted investigations between 2015 and 2022. At a hearing on Thursday, Mr Justice Kimblin allowed the legal action to continue, saying it was arguable that the foundation had a “legitimate expectation” that the government would implement the recommendations. The Home Office is defending the claim.

Circumcision classed as potentially harmful practice in new CPS guidance

Circumcision has been classed as a potentially harmful practice in new official guidance for criminal prosecutors in England and Wales, but controversial plans to class it as possible child abuse have been dropped. The Crown Prosecution Service (CPS) decided against including circumcision alongside dowry abuse, witchcraft and female genital mutilation in its new guidance on honour-based abuse, after objections from Jewish and Muslim groups when the plans were revealed by the Guardian. Instead it has included a similar section on circumcision in updated guidance on offences against the person. It says: “In certain circumstances, such as the procedure being carried out by those falsely claiming to be suitably qualified practitioners or carried out in non-sterile conditions, it can cross the line into a harmful practice.” Prosecutors are advised to consider child cruelty offences under the Children and Young Persons Act 1933 or assault offences under the Offences against the Person Act 1861.

Scientists laud potentially life-changing drug for children with resistant form of epilepsy

Scientists have hailed a potentially life-changing drug for children with a hard-to-treat form of epilepsy, after promising early clinical trial results. Dravet syndrome is a genetic disorder that causes treatment-resistant epilepsy and is often accompanied by speech and developmental delays. About 3,000 people are thought to have the condition in the UK. Current treatments aim to control the number and severity of seizures, but often do not work. These preliminary trials, led by UCL and Great Ormond Street hospital (GOSH), found that the drug appeared to be safe and well tolerated by the 81 children taking part.

More than 220m children will be obese by 2040 without drastic action, report warns

Without drastic action more than 220 million children could have obesity by 2040, an international report has warned. Globally, in 2025 about 180 million children were obese. But new figures from the World Obesity Federation suggest that by 2040, about 227 million of all five- to 19-year-olds will have obesity and more than half a billion will be overweight. According to the federation’s 2026 world obesity atlas, that would mean that at least 120 million school-age children would have early signs of chronic disease caused by their high body mass index (BMI). Someone is classed as obese if their BMI is 30 or above, and overweight if it is above 25.

T20 World Cup final, Six Nations, FA Cup and F1 returns – follow with us

Nine speeding tickets and counting: Myles Garrett and the illusion of invincibility | Lee Escobedo

Stakes sky high for England as Italy eye Six Nations upset for the ages | Robert Kitson

Naoya Inoue to face Junto Nakatani in historic Tokyo Dome megafight

Scotland sense chance against France to end cycle of brilliance and despair | Michael Aylwin

World Cup exit ‘a tough pill to swallow’ for England’s Jacob Bethell after maiden T20 ton