How ICE is using facial recognition in Minnesota

Immigration enforcement agents across the US are increasingly relying on a new smartphone app with facial recognition technology. The app is named Mobile Fortify. By simply pointing a phone’s camera at their intended target and scanning the person’s face, agents can use Mobile Fortify to pull data on an individual from multiple federal and state databases, some of which federal courts have deemed too inaccurate for arrest warrants. The US Department of Homeland Security has used Mobile Fortify to scan faces and fingerprints in the field more than 100,000 times, according to a lawsuit brought earlier this month by Illinois and Chicago against the federal agency. Legal experts say that is a drastic shift from immigration enforcement’s earlier use of facial recognition technology, which was largely limited to investigations and ports of entry and exit.

The app’s existence was first uncovered last summer by 404 Media, through leaked emails. In October, 404 Media also reported on internal DHS documents stating that people cannot refuse to be scanned by Mobile Fortify. “Here we have ICE using this technology in exactly the confluence of conditions that lead to the highest false match rates,” says Nathan Freed Wessler, deputy director of the ACLU’s speech, privacy and technology project. “A false result from this technology can turn somebody’s life totally upside down.” The larger implications for democracy are chilling, too, he notes: “ICE is effectively trying to create a biometric checkpoint society.”

Use of the app has inspired backlash on the streets, in the courts and on Capitol Hill. Protesters are using a variety of tactics to fight back, including recording masked agents and using burner phones and donated dashboard cameras, according to the Washington Post. Underpinning resistance to ICE’s use of facial recognition are doubts about the technology’s efficacy. Research has found higher error rates when the technology identifies women and people of color than when it scans white faces.

ICE’s use of the technology often occurs in intense, fast-moving situations, which makes misidentification more likely. Those being scanned may be people of color. They could be turning away from officers because they don’t want to be identified. The lighting could be poor. The Illinois lawsuit against the DHS takes particular issue with the federal agency’s use of Mobile Fortify, arguing that the app goes far beyond what Congress allows with regard to the collection of biometric data.

The complaint cited several examples in which federal agents appeared to take photos or scans of US citizens across Illinois without their consent.

Democratic lawmakers in Congress introduced a bill on 15 January that would ban the homeland security department outright from using Mobile Fortify or similar apps, except for identification at points of entry. This follows a September letter sent by senators to ICE, asking for more information about the app and declaring that “even when accurate, this type of on-demand surveillance threatens the privacy and free speech rights of everyone in the United States”.

The DHS said in a statement that Mobile Fortify does not violate constitutional rights or compromise privacy. “It operates with a deliberately high matching threshold and queries only limited CBP immigration datasets. The application does not access open-source material, scrape social media, or rely on publicly available data,” a spokesperson said. “Mobile Fortify has not been blocked, restricted, or curtailed by the courts or by legal guidance. It is lawfully used nationwide in accordance with all applicable legal authorities.” According to 404 Media, the app’s database consists of some 200m images.

Observers, experts and at least one congressman have said federal immigration agents frequently do not ask for consent to scan a person’s face, and may dismiss other documentation that contradicts the app’s results.

ICE has been documented using biometrics as a definitive determination of someone’s citizenship in the absence of identification. This means that ICE is not required to conduct additional vetting of facial scans or checks to avoid a misidentification. 404 Media reported earlier this month that Mobile Fortify misidentified a detained woman during an immigration raid; the app returned two different names, neither of them correct. “Facial recognition – to the extent it should be used at all – is really supposed to be a starting point,” says Jake Laperruque, deputy director of the security and surveillance project at the Center for Democracy and Technology (CDT). “If you treat this as an endpoint – as a definitive ID – you’re going to have errors, and you’re going to end up arresting and jailing people that are not actually who the machine says it is.”

Laperruque says that even police departments across the country have pushed back against over-reliance on facial recognition, treating it as a lead at most. At least 15 states are wary of using it at all and have laws limiting police use of the technology. In 2019, San Francisco became the first major US city to ban the use of facial recognition technology by police and all other local government agencies. In September 2023, the DHS issued a directive requiring that the department test the technology for unintended bias and offer US citizens the choice to opt out of scans not conducted by law enforcement. That directive appeared to be rescinded in February last year.

ICE’s stops, whether or not they involve face-scanning, have also been subject to litigation. They have often been referred to as “Kavanaugh stops” after the supreme court justice Brett Kavanaugh wrote in a concurring opinion that Hispanic residents’ “apparent ethnicity” can be a “relevant factor” for ICE to stop them and demand proof of citizenship. The ACLU sued the Trump administration earlier this month, accusing federal immigration authorities of racial profiling and unlawful arrests.

At Davos, tech CEOs laid out their vision for AI’s world domination

Hello, and welcome to TechScape. This week’s edition is a team effort: my colleague Heather Stewart reports on the plans for AI’s world domination at Davos; I examine how huge investments have followed AI companies with little to their names but drama and dreams; and Nick Robins-Early spotlights how lax regulation of autonomous driving in Texas allowed Tesla to thrive. When they weren’t discussing Donald Trump, delegates at the World Economic Forum last week were being dazzled by the prospects for artificial intelligence. Up and down the main street of the Swiss Alpine town, almost every shopfront was temporarily emblazoned with the neon slogan of a tech firm, or of a consultancy promising to tell executives how to incorporate AI into their business. Cloudflare’s wood-panelled HQ urged delegates to “connect, protect and build together”, and Wipro’s shouted: “Dream Solve Prove Repeat.”

‘Wake up to the risks of AI, they are almost here,’ Anthropic boss warns

Humanity is entering a phase of artificial intelligence development that will “test who we are as a species”, the boss of the AI startup Anthropic has said, arguing that the world needs to “wake up” to the risks. Dario Amodei, a co-founder and the chief executive of the company behind the hit chatbot Claude, voiced his fears in a 19,000-word essay titled “The adolescence of technology”. Describing the arrival of highly powerful AI systems as potentially imminent, he wrote: “I believe we are entering a rite of passage, both turbulent and inevitable, which will test who we are as a species.” Amodei added: “Humanity is about to be handed almost unimaginable power, and it is deeply unclear whether our social, political, and technological systems possess the maturity to wield it.” The tech entrepreneur, whose company is reportedly worth $350bn (£255bn), said his essay was an attempt to “jolt people awake” because the world needed to “wake up” to the need for action on AI safety.

Tech giants head to landmark US trial over social media addiction claims

For the first time, a huge group of parents, teens and school districts is taking on the world’s most powerful social media companies in open court, accusing the tech giants of intentionally designing their products to be addictive. The blockbuster legal proceedings may see multiple CEOs, including Meta’s Mark Zuckerberg, face harsh questioning. A long-awaited series of trials kicks off in Los Angeles superior court on Tuesday, in which hundreds of US families will allege that the platforms of Meta, Snap, TikTok and YouTube harm children. Once young people are hooked, the plaintiffs allege, they fall prey to depression, eating disorders, self-harm and other mental health issues. Approximately 1,600 plaintiffs are included in the proceedings, involving more than 350 families and 250 school districts.

California governor Gavin Newsom accuses TikTok of suppressing content critical of Trump

California governor Gavin Newsom has accused TikTok of suppressing content critical of president Donald Trump and launched a review of the platform’s content moderation practices to determine whether they violated state law; the platform has blamed a systems failure for the issues. The step comes after TikTok’s Chinese owner, ByteDance, said last week it had finalised a deal to set up a majority US-owned joint venture that will secure US data, to avoid a US ban on the short video app, which is used by more than 200 million Americans. “Following TikTok’s sale to a Trump-aligned business group, our office has received reports, and independently confirmed instances, of suppressed content critical of President Trump,” Newsom’s office said on X on Monday, without elaborating. “Gavin Newsom is launching a review of this conduct and is calling on the California Department of Justice to determine whether it violates California law,” it added. In response, a representative for the joint venture for TikTok in the US pointed to a prior statement that blamed a data centre power outage, adding: “It would be inaccurate to report that this is anything but the technical issues we’ve transparently confirmed.”

Georgia leads push to ban datacenters used to power America’s AI boom

Lawmakers in several states are exploring laws that would impose statewide bans on building new datacenters, as the power-hungry facilities have moved to the center of economic and environmental concerns in the US. In Georgia, a state lawmaker has introduced a bill proposing what could become the first statewide moratorium on new datacenters in America. The bill is one of at least three proposed statewide moratoriums on datacenters introduced in state legislatures in the last week; lawmakers in Maryland and Oklahoma are also considering similar measures. But it is Georgia that is quickly becoming ground zero in the fight against the untrammelled growth of datacenters, which are notorious for using huge amounts of energy and water, as they power the emerging artificial intelligence industry. The Georgia bill seeks to halt all such projects until March of next year “to allow state, county and municipal-level officials time to set necessary policies for regulating datacenters … which permanently alter the landscape of our state”, said the bill’s sponsor, Democratic state legislator Ruwa Romman.

EU launches inquiry into X over sexually explicit images made by Grok AI

The European Commission has launched an investigation into Elon Musk’s X over the production of sexually explicit images and the spreading of possible child sexual abuse material by the platform’s AI chatbot, Grok. The formal inquiry, launched on Monday, also extends an investigation into X’s recommender systems, the algorithms that help users discover new content. Grok has sparked international outrage by allowing users to digitally strip women and children and put them into provocative poses. Grok generated about 3m sexualised images in less than two weeks, including 23,000 that appeared to depict children, according to researchers at the Center for Countering Digital Hate. The commission said its investigation would “assess whether the company properly assessed and mitigated risks” stemming from Grok’s functionalities in the EU, including risks relating to the sharing of illegal content such as manipulated sexually explicit images and “content that may amount to” child sexual abuse material.

U-turn on pubs has not solved the government’s mess on business rates | Nils Pratley

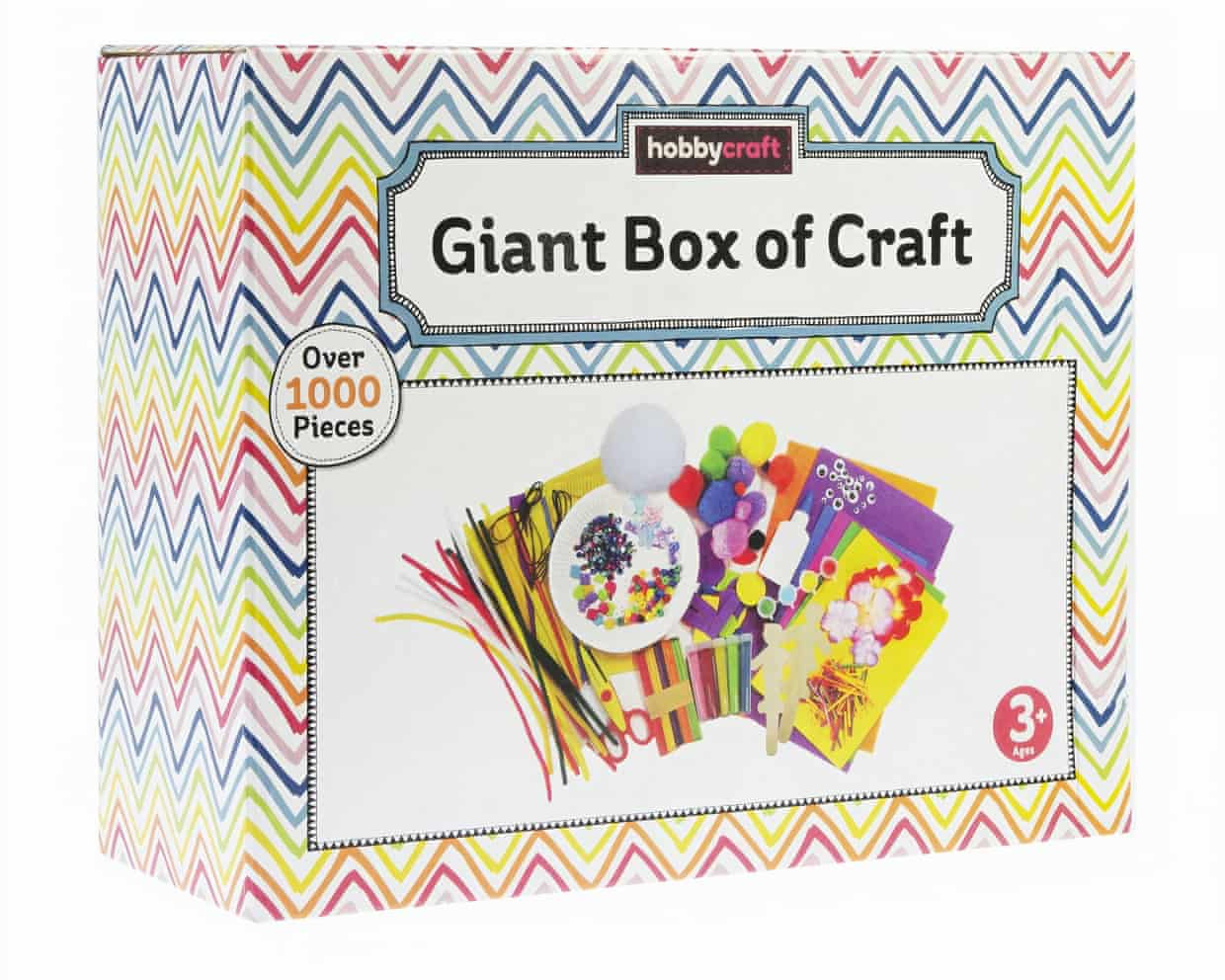

Hobbycraft issues full recall of asbestos-tainted children’s play sand

‘It’s a hospitality-wide problem’: night-time traders react to business rates relief plan

Pubs and live music venues to get support after business rates backlash

Treasury announces business rate support package worth more than £80m a year – as it happened

Can’t decide on a food delivery? Just Eat launches AI chatbot to help you choose