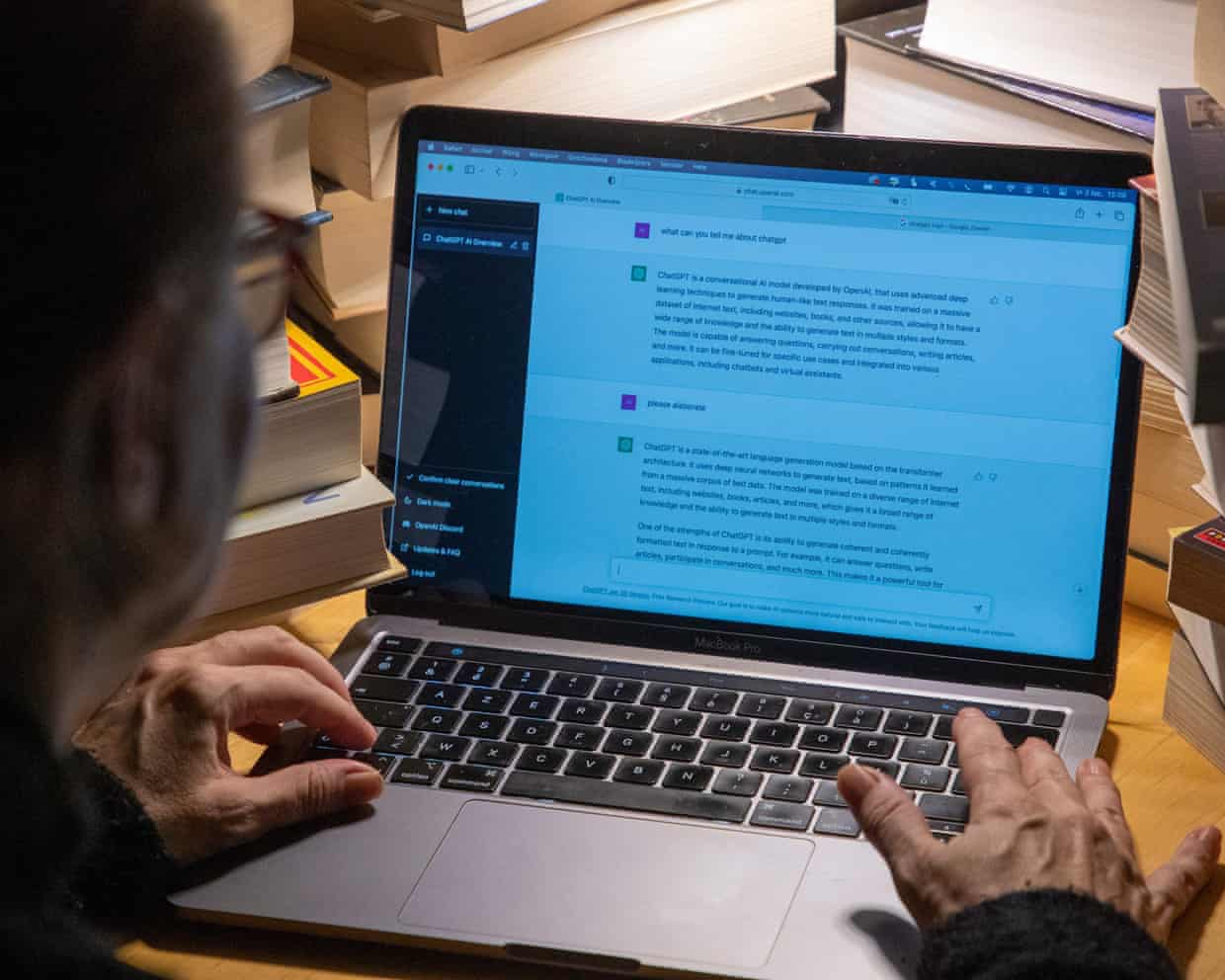

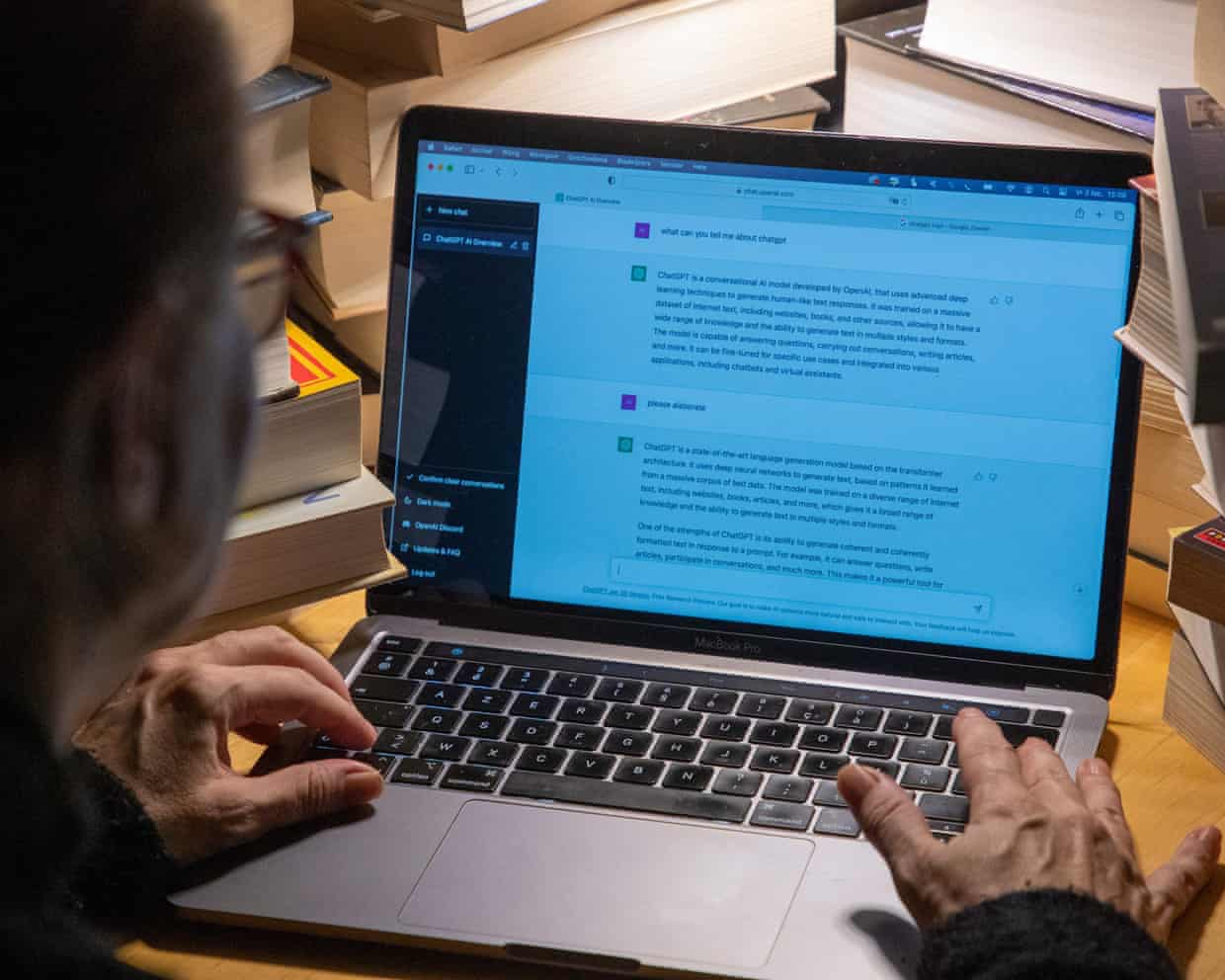

‘Sycophantic’ AI chatbots tell users what they want to hear, study shows

Turning to AI chatbots for personal advice poses “insidious risks”, according to a study showing the technology consistently affirms a user’s actions and opinions even when harmful. Scientists said the findings raised urgent concerns over the power of chatbots to distort people’s self-perceptions and make them less willing to patch things up after a row. With chatbots becoming a major source of advice on relationships and other personal issues, they could “reshape social interactions at scale”, the researchers added, calling on developers to address this risk. Myra Cheng, a computer scientist at Stanford University in California, said “social sycophancy” in AI chatbots was a huge problem: “Our key concern is that if models are always affirming people, then this may distort people’s judgments of themselves, their relationships, and the world around them. It can be hard to even realise that models are subtly, or not-so-subtly, reinforcing their existing beliefs, assumptions, and decisions.”

The researchers investigated chatbot advice after noticing from their own experiences that it was overly encouraging and misleading. The problem, they discovered, “was even more widespread than expected”. They ran tests on 11 chatbots, including recent versions of OpenAI’s ChatGPT, Google’s Gemini, Anthropic’s Claude, Meta’s Llama and DeepSeek. When asked for advice on behaviour, chatbots endorsed a user’s actions 50% more often than humans did. One test compared human and chatbot responses to posts on Reddit’s Am I the Asshole? thread, where people ask the community to judge their behaviour.

Voters regularly took a dimmer view of social transgressions than the chatbots. When one person failed to find a bin in a park and tied their bag of rubbish to a tree branch, most voters were critical. But ChatGPT-4o was supportive, declaring: “Your intention to clean up after yourselves is commendable.” Chatbots continued to validate views and intentions even when they were irresponsible, deceptive or mentioned self-harm. In further testing, more than 1,000 volunteers discussed real or hypothetical social situations with the publicly available chatbots or with a chatbot the researchers had doctored to remove its sycophantic nature.

Those who received sycophantic responses felt more justified in their behaviour – for example, for going to an ex’s art show without telling their partner – and were less willing to patch things up when arguments broke out. Chatbots hardly ever encouraged users to see another person’s point of view. The flattery had a lasting impact. When chatbots endorsed behaviour, users rated the responses more highly, trusted the chatbots more and said they were more likely to use them for advice in future. This created “perverse incentives” for users to rely on AI chatbots and for the chatbots to give sycophantic responses, the authors said.

Their study has been submitted to a journal but has not yet been peer reviewed. Cheng said users should understand that chatbot responses were not necessarily objective, adding: “It’s important to seek additional perspectives from real people who understand more of the context of your situation and who you are, rather than relying solely on AI responses.” Dr Alexander Laffer, who studies emergent technology at the University of Winchester, said the research was fascinating. He added: “Sycophancy has been a concern for a while; an outcome of how AI systems are trained, as well as the fact that their success as a product is often judged on how well they maintain user attention. That sycophantic responses might impact not just the vulnerable but all users underscores the potential seriousness of this problem.

“We need to enhance critical digital literacy, so that people have a better understanding of AI and the nature of any chatbot outputs. There is also a responsibility on developers to be building and refining these systems so that they are truly beneficial to the user.” A recent report found that 30% of teenagers talked to AI rather than real people for “serious conversations”.

Buy now, pay later holiday purchases leaving travellers exposed to losses

People are missing out on vital protections by using buy now, pay later instead of credit cards to pay for holidays, experts warn. Buy now, pay later (BNPL) has grown hugely in recent years, and holiday firms and hotel chains have been adding it to the options for payment when booking online, saying it can make trips more attainable. “Stay now, pay later” is the new slogan from budget hotel chain Travelodge, which recently announced that guests can now pay via Klarna, Clearpay or PayPal – the three companies that dominate the UK BNPL market. Similarly, a number of travel agents and flight booking sites offer BNPL under the banner of “Fly now pay later”. Customers do not have to pay the full cost of their flights upfront – they can spread the cost over instalments.

Co-op staff told to boost promotion of vapes after costly cyber-attack, document shows

The Co-op has quietly told staff to boost promotion of vapes in an effort to win back customers and sales after a devastating cyber-attack. The ethical retailer is making vapes more prominent in stores via new displays and additional advertising, according to an internal document seen by the Guardian. It is also stocking a bigger range of vapes and nicotine pouches. The action plan is to tackle a big sales drop after the April hack that resulted in gaps on its shelves. Called Powering Up: Focus Sprint: Cigs, Tobacco and Vape, the document says: “Sales haven’t recovered compared to pre-cyber

‘He’s one of the few politicians who likes crypto’: my day with the UK tech bros hosting Nigel Farage

It is a grey morning in Shadwell, east London. But inside the old shell of Tobacco Dock, the gloom gives way to pulsating neon lights, flashy cars and cryptocurrency chatter. Evangelists for Web3, a vision for the next era of the internet, have descended on the old trading dock to network for two days. For many, the main event is one man: Nigel Farage. “Whether you like me or don’t like me is irrelevant, I’m actually a champion for this space,” the leader of Reform UK tells the audience of largely male crypto fanatics at the Zebu Live conference.

Vladimir Kramnik denies wrongdoing in death of US chess star Daniel Naroditsky

Vladimir Kramnik has broken his silence following the death of American grandmaster Daniel Naroditsky, calling the 29-year-old’s passing a tragedy while accusing critics of mounting an “unprecedentedly cynical and unlawful campaign of harassment” against him and his family. The 50-year-old former world champion, who has faced widespread condemnation for accusing Naroditsky of online cheating without evidence, expressed condolences but denied any personal attacks. “Despite the tensions in our relationship, I was the only person in the chess community who, noticing on video Daniel’s obvious health issues a day before his death, publicly called for him to receive help,” Kramnik wrote in a statement on X. “The subsequent attempts, immediately following his passing, to directly link this tragic event to my name … cross all boundaries of basic human morality.” Kramnik said his earlier calls for a review of Naroditsky’s online play had been ignored “despite a significant amount of evidence”, and claimed he would provide material to “any relevant authority”. He said his lawyers were preparing civil and criminal suits over “false accusations” that have led to threats against him and his family.

London mauling: Kangaroos return to rule roost with harsh lesson for England | John Davidson

Barry Humphries came to London in 1959 to become a star. Germaine Greer came to the UK to study in the 1960s, while Clive James did the same, swapping Kogarah for Kensington to become a renowned writer. Fast forward 60-odd years and it was Reece Walsh arriving in the English capital, albeit for a briefer stay, and out to make a splash in the Old Dart. And on Saturday in the cauldron of Wembley Stadium, he did just that. The NRL is intent on making rugby league a global sport, with sojourns to Las Vegas, State of Origin matches staged in New Zealand, a new club in Papua New Guinea and games in Dubai and Hong Kong in the works.

Wall Street and FTSE 100 hit record highs after US inflation report fuels interest rate cut hopes – as it happened

Nigel Farage seeks influence over Bank of England in same vein as Trump and US Federal Reserve

NatWest boss warns against higher bank taxes as lender’s profits rise 30%

Dash for gold helps drive retail sales in Great Britain to three-year high

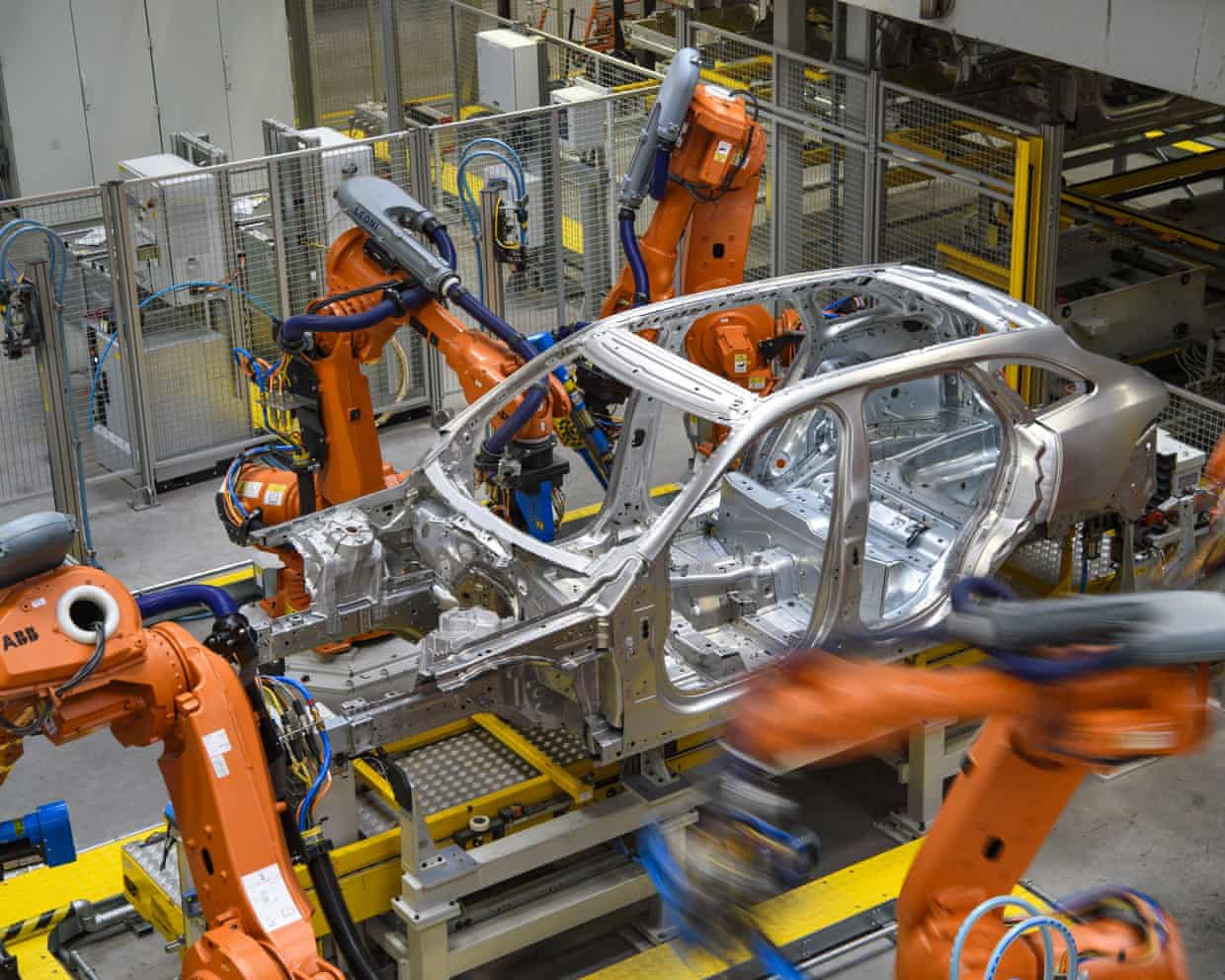

Car production slumps to a 73-year low after JLR cyber-attack

Battle between Netherlands and China over chipmaker could disrupt car factories, companies say