Hundreds of nonconsensual AI images being created by Grok on X, data shows

New research that samples X users prompting Elon Musk’s AI chatbot Grok demonstrates how frequently people are creating sexualized images with it. Nearly three-quarters of posts collected and analyzed by a PhD researcher at Dublin’s Trinity College were requests for nonconsensual images of real women or minors with items of clothing removed or added. The posts offer a new level of detail on how the images are generated and shared on X, with users coaching one another on prompts; suggesting iterations on Grok’s presentations of women in lingerie or swimsuits, or with areas of their body covered in semen; and asking Grok to remove outer clothing in replies to posts containing self-portraits by female users. Among hundreds of posts identified by Nana Nwachukwu as direct, nonconsensual requests for Grok to remove or replace clothing, dozens reviewed by the Guardian show users posting pictures of women including celebrities, models, stock photos and women who are not public figures posing in snapshots. Several posts in the trove reviewed by the Guardian have received tens of thousands of impressions and come from premium, “blue check” accounts, including accounts with tens of thousands of followers.

Premium accounts with more than 500 followers and 5m impressions over three months are eligible for revenue-sharing under X’s eligibility rules. In one Christmas Day post, an account with more than 93,000 followers presented side-by-side images of an unknown woman’s backside with the caption: “Told Grok to make her butt even bigger and switch leopard print to USA print. 2nd pic I just told it to add cum on her ass lmao.” A 3 January post, representative of dozens reviewed by the Guardian, captioned an apparent holiday snap of an unknown woman: “@grok replace give her a dental floss bikini.” Within two minutes, Grok provided a photorealistic image that satisfied the request.

Other posts in the trove show more sophisticated employment of JSON-prompt engineering to induce Grok to generate novel sexualized images of fictitious women. The data does not cover all such requests made to Grok. While content analysis firm Copyleaks reported on 31 December that X users were generating “roughly one nonconsensual sexualized image per minute”, Nwachukwu said that her sample is limited to just more than 500 posts she was able to collect with X’s API via a developer account. She said that the true scale “could be thousands, it could be hundreds of thousands” but that changes made by Musk to the API mean that “it is much harder to see what is happening” on the platform. On Wednesday, Bloomberg News cited researchers who found that Grok users were generating up to 6,700 undressed images per hour.

Nwachukwu, an expert on AI governance and a longtime observer of and participant in social media safety initiatives, said that she first noticed requests along these lines from X users back in 2023. At the time, she said, “Grok did not oblige the requests. It wasn’t really good at doing those things.” The bot’s responses began changing in 2024, and reached a critical mass late last year. In October 2025, she noticed that “people were putting Halloween attire on themselves using Grok. Of course, a section of users realized we can also ask it to change what other people are wearing.” By year’s end, “there was a huge uptick in people asking Grok to put different people in bikinis or other types of suggestive clothing”.

There were other indications last year of an increased willingness to tolerate or even encourage the generation of sexually suggestive material with Grok. In August, xAI incorporated a “spicy mode” setting in the mobile version of Grok’s text-to-video generation tool, leading the Verge to characterize it as “a service designed specifically to make suggestive videos”. Nwachukwu’s data is just the latest indication of how the platform under Musk has become a magnet for forms of content that other platforms work to exclude, including hate speech, gore content and copyrighted material.

On Friday, Grok issued a bizarre public apology over the incident on X, claiming that “xAI is implementing stronger safeguards to prevent this”. On Tuesday, X Safety posted a promise to ban users who shared child sexual abuse material (CSAM). Musk himself said: “Anyone using or prompting Grok to make illegal content will suffer the same consequences as if they upload illegal content.” Nwachukwu said, however, that posts like those she has already collected are still appearing on the platform. Musk is giving “the middle finger to everyone who has asked for the platform to be moderated”, she said.

The billionaire slashed Twitter’s trust and safety teams when he took over in 2022. She added that other AI chatbots do not have the same issues. “Other generative AI platforms – ChatGPT or Gemini – they have safeguards,” she said. “If you ask them to generate something that looks like a person, it would never be a depiction of that person. They don’t generate depictions of real human beings.”

The revelations about the nonconsensual imagery on X have already drawn the attention of regulators in the UK, Europe, India and Australia. Nwachukwu, who is from Nigeria, pointed to a specific harm being done in the posts to “women from conservative societies”. “There’s a lot of targeting of people from conservative beliefs, conservative societies: west Africa, south Asia. This represents a different kind of harm for them,” she said.

Thousands of offenders in England to get health support at probation meetings

About 4,000 offenders in England will get targeted healthcare sessions during their probation appointments as part of a new pilot scheme. Offenders are far more likely to have poor physical or mental health or addiction issues, which increases the likelihood of reoffending. A recent report by the chief medical officer for England, Chris Whitty, found that half of offenders on probation smoked, many had drug or alcohol addiction issues and a majority had poor mental health. They were also less likely to receive screening for prostate, breast, lung or cervical cancers. Many offenders do not receive timely care because they are not registered with a GP, meaning often they seek help for any physical or mental health problems only when their symptoms have become acute, turning to A&E

Under-pressure charities face conflicting demands | Letters

Your editorial on charities makes many useful points about their contribution to social life and appropriately highlights the harsh nature of the current funding environment (The Guardian view on hard times for Britain’s charities: struggling to do more with less, 31 December). However, it is overoptimistic about the ability of charities to resist capture by funders when you state that “their priorities are not distorted by the profit-seeking motives of market-based providers”. This is true, but their priorities are frequently distorted by the requirements of their funders. In a target-driven society, funders – state, corporate or charitable – have their own performance indicators to meet. Consequently, as our own research has demonstrated, charity organisations often cannot access funding for the expressed needs of their members and user groups

A rare joy at police station in Huyton | Brief letters

Your letters on the kindness of strangers (2 January) took me back to my days as a duty sergeant at Huyton in the 1990s. A man walked into the station with more than £1,000 in a cash bag he’d found by a bank’s night safe. As we gathered round, another chap burst in, white as a sheet – he’d absent‑mindedly walked off and left the day’s takings behind. Reuniting the two was a rare joy: a small reminder that people can still surprise you.
Terry O’Hara
Liverpool

I don’t like to pick holes in your inspiring article on communities supporting refugees (Report, 7 January), but Ashbourne is not in “the north of England”

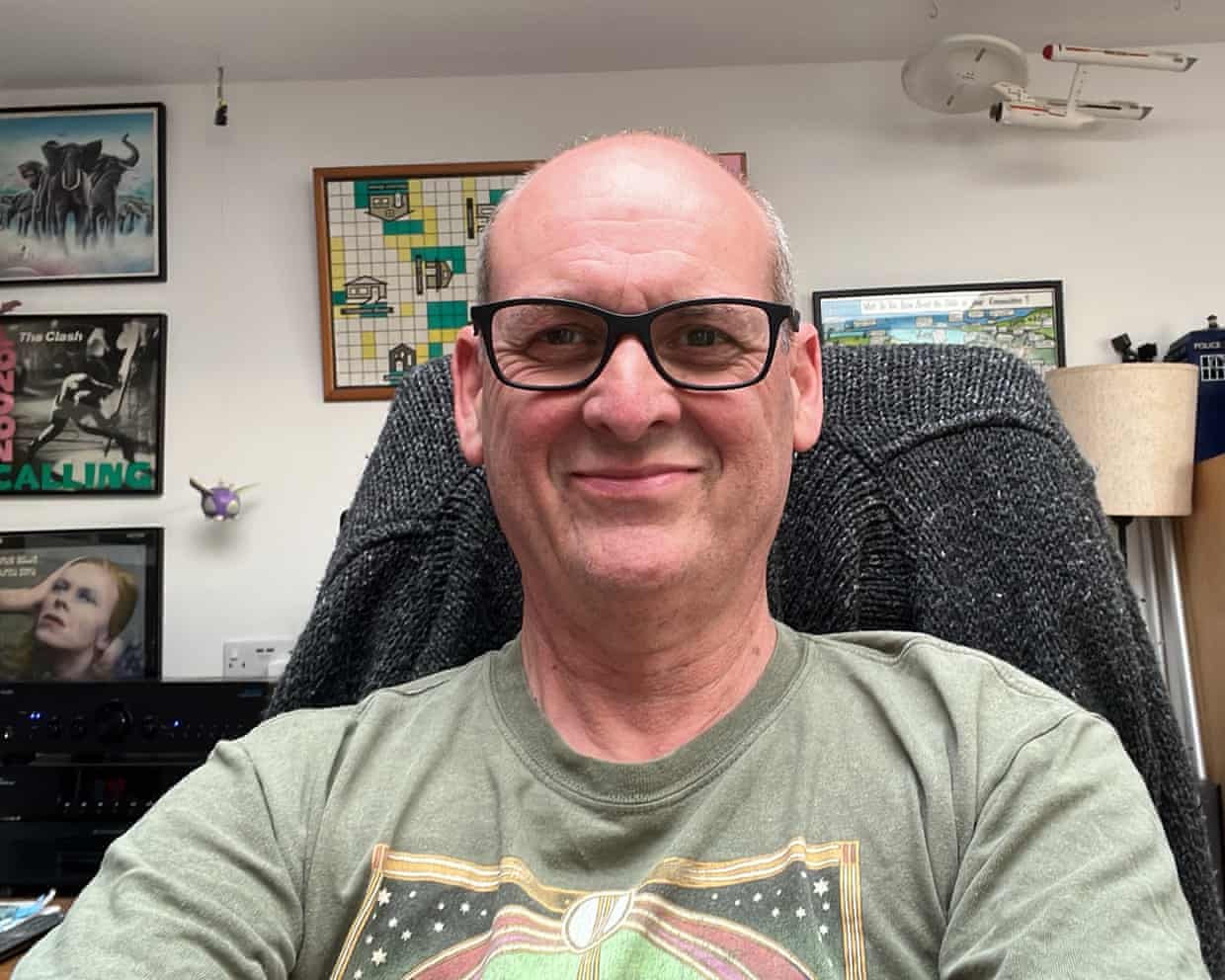

Jim Thomas obituary

My father, Jim Thomas, who has died of cancer aged 61, started out on his career in health and social care as a community nurse in East Anglia in 1986, and worked his way up to be head of workforce capacity and transformation at the charity Skills for Care, where he was employed from 2007 to 2022. Throughout his career, Jim fought for people to have more control over their care, and he had a deep suspicion of authority and rules for the sake of rules. He was a lateral thinker who cut through the jargon and asked: what do people actually need? As a young nurse, he was asked to convince an elderly man to move into sheltered housing. But he quickly realised that this man enjoyed living in his isolated countryside home with many cats, a long-drop toilet and a water supply from a nearby stream. To keep the authorities at bay, Jim persuaded the man to connect the house to mains water and get a flushable toilet and litter trays

Guardian readers raise more than £850,000 as charity appeal enters final days

The Guardian’s Hope appeal has so far raised more than £850,000 thanks to generous readers’ continuing support for our five inspirational charity partners, whose work aims to tackle division, racism and hatred. The 2025 Guardian appeal is raising funds for five charities: Citizens UK, the Linking Network, Locality, Hope Unlimited Charitable Trust and Who is Your Neighbour?. The Hope appeal, entering its final few days, is aiming to raise £1m for grassroots voluntary organisations campaigning against extremism, violence and harassment, anti-migrant rhetoric, and the re-emergence of “1970s-style racism”. The appeal has struck a chord with thousands of readers. One emailed us to say: “I am so worried about the division being sown between people in the UK

Hospital patients collapsing while out of sight on corridors, NHS watchdog says

Patients are collapsing in hospitals unseen by staff because overcrowding means they are stranded out of sight on corridors, the NHS’s safety watchdog has revealed. Using corridors, storerooms and gyms as extra care areas poses serious risks to patients, including falls, infections and a lack of oxygen, the Health Services Safety Investigations Body (HSSIB) said. NHS staff told investigators that some patients who end up on a trolley or bed in overflow areas have not been assessed or started treatment “and so may be at increased risk of deterioration, which may go unnoticed or be detected late in a temporary care environment,” HSSIB’s report said. It highlighted that patients in these areas are at risk of not getting prompt attention if they deteriorate and suffer a medical emergency. “Several nurses shared a patient safety concern around calling for help and responding to a medical emergency in temporary care environments,” the report said

US economy added fewer jobs than forecast in December, but January interest rate cut very unlikely – as it happened

High costs, falling returns: what could go wrong for Trump’s Venezuela oil gamble?

X UK revenues drop nearly 60% in a year as content concerns spook advertisers

Spotify no longer running ICE recruitment ads, after US government campaign ends

All-heart Travis Head leaves indelible mark on Ashes series by playing his own game | Angus Fontaine

Big Bash League momentum builds but its future remains up in the air | Jack Snape