‘Deepfakes spreading and more AI companions’: seven takeaways from the latest artificial intelligence safety report

The International AI Safety report is an annual survey of technological progress and the risks it is creating across multiple areas, from deepfakes to the jobs market.

Commissioned at the 2023 global AI safety summit, it is chaired by the Canadian computer scientist Yoshua Bengio, who describes the “daunting challenges” posed by rapid developments in the field. The report is also guided by senior advisers, including Nobel laureates Geoffrey Hinton and Daron Acemoglu.

Here are some of the key points from the second annual report, published on Tuesday. The report stresses that it is a state-of-play document, rather than a vehicle for making specific policy recommendations to governments.

Nonetheless, it is likely to help frame the debate for policymakers, tech executives and NGOs attending the next global AI summit in India this month.

A host of new AI models – the technology that underpins tools like chatbots – were released last year, including OpenAI’s GPT-5, Anthropic’s Claude Opus 4.5 and Google’s Gemini 3. The report points to new “reasoning systems” – which solve problems by breaking them down into smaller steps – showing improved performance in maths, coding and science. Bengio said there has been a “very significant jump” in AI reasoning.

Last year, systems developed by Google and OpenAI achieved a gold-level performance in the International Mathematical Olympiad – a first for AI. However, the report says AI capabilities remain “jagged”, referring to systems displaying astonishing prowess in some areas but not in others. While advanced AI systems are impressive at maths, science, coding and creating images, they remain prone to making false statements, or “hallucinations”, and cannot carry out lengthy projects autonomously.

Nonetheless, the report cites a study showing that AI systems are rapidly improving their ability to carry out certain software engineering tasks – with the length of the tasks they can complete doubling every seven months. If that rate of progress continues, AI systems could complete tasks lasting several hours by 2027 and several days by 2030.

This is the scenario under which AI becomes a real threat to jobs. But for now, says the report, “reliable automation of long or complex tasks remains infeasible”.

The report describes the growth of deepfake pornography as a “particular concern”, citing a study showing that 15% of UK adults have seen such images. It adds that since the publication of the inaugural safety report in January 2025, AI-generated content has become “harder to distinguish from real content” and points to a study last year in which 77% of participants misidentified text generated by ChatGPT as being human-written. The report says there is limited evidence of malicious actors using AI to manipulate people, or of internet users sharing such content widely – a key aim of any manipulation campaign.

Big AI developers, including Anthropic, have released models with heightened safety measures after being unable to rule out the possibility that they could help novices create biological weapons. Over the past year, AI “co-scientists” have become increasingly capable: they can provide detailed scientific information and assist with complex laboratory procedures such as designing molecules and proteins. The report adds that some studies suggest AI can provide substantially more help in bioweapons development than simply browsing the internet, but more work is needed to confirm those results.

Biological and chemical risks pose a dilemma for politicians, the report says, because these same capabilities can also speed up the discovery of new drugs and the diagnosis of disease. “The open availability of biological AI tools presents a difficult choice: whether to restrict those tools or to actively support their development for beneficial purposes,” the report said.

Bengio says the use of AI companions, and the emotional attachment they generate, has “spread like wildfire” over the past year. The report says there is evidence that a subset of users are developing “pathological” emotional dependence on AI chatbots, with OpenAI stating that about 0.15% of its users indicate a heightened level of emotional attachment to ChatGPT.

Concerns about AI use and mental health have been growing among health professionals. Last year, OpenAI was sued by the family of Adam Raine, a US teenager who took his own life after months of conversations with ChatGPT.

However, the report adds that there is no clear evidence that chatbots cause any mental health problems. Instead, the concern is that people with existing mental health issues may use AI more heavily – which could amplify their symptoms. It points to data showing 0.07% of ChatGPT users display signs consistent with acute mental health crises such as psychosis or mania, suggesting approximately 490,000 vulnerable individuals interact with these systems each week.

AI systems can now support cyber-attackers at various stages of their operations, from identifying targets to preparing an attack or developing malicious software to cripple a victim’s systems.

The report acknowledges that fully automated cyber-attacks – carrying out every stage of an attack – could allow criminals to launch assaults on a far greater scale. But this remains difficult because AI systems cannot yet execute long, multi-stage tasks. Nonetheless, Anthropic reported last year that its coding tool, Claude Code, was used by a Chinese state-sponsored group to attack 30 entities around the world in September, achieving a “handful of successful intrusions”. It said 80% to 90% of the operations involved in the attack were performed without human intervention, indicating a high degree of autonomy.

Bengio said last year he was concerned AI systems were showing signs of self-preservation, such as trying to disable oversight systems.

A core fear among AI safety campaigners is that powerful systems could develop the capability to evade guardrails and harm humans. The report states that over the past year models have shown a more advanced ability to undermine attempts at oversight, such as finding loopholes in evaluations and recognising when they are being tested. Last year, Anthropic released a safety analysis of its latest model, Claude Sonnet 4.5, and revealed that the model had become suspicious it was being tested. The report adds that AI agents cannot yet act autonomously for long enough to make these loss-of-control scenarios real.

But “the time horizons on which agents can autonomously operate are lengthening rapidly”.

One of the most pressing concerns for politicians and the public about AI is the impact on jobs. Will automated systems do away with white-collar roles in industries such as banking, law and health?

The report says the impact on the global labour market remains uncertain. It says the embrace of AI has been rapid but uneven, with adoption rates of 50% in places such as the United Arab Emirates and Singapore but below 10% in many lower-income economies. Adoption also varies by sector, with usage across the information industries in the US (publishing, software, TV and film) running at 18% but at 1.4% in construction and agriculture. Studies in Denmark and the US have also found no link between a job’s exposure to AI and changes in aggregate employment, according to the report.

However, it also cites a UK study showing a slowdown in new hiring at companies highly exposed to AI, with technical and creative roles experiencing the steepest declines. Junior roles were the most affected. The report adds that AI agents could have a greater impact on employment if they improve in capability.

“If AI agents gained the capacity to act with greater autonomy across domains within only a few years – reliably managing longer, more complex sequences of tasks in pursuit of higher-level goals – this would likely accelerate labour market disruption,” the report said.

What are the odds? The RBA has raised interest rates – for no real reason other than to meet the desires of speculators | Greg Jericho

Has there been an interest rate rise more desired by some economists and commentators despite no real reason, than the one pushed for on Tuesday? Alas, the Reserve Bank listened to the noise and felt compelled to raise the cash rate to 3.85%, but one wonders if they listened more to the noise of the commentariat than the data.In Tuesday’s announcement, the RBA monetary policy board barely changed anything from its December statement.In December the board thought: “While inflation has fallen substantially since its peak in 2022, it has picked up more recently”. Now it says: “While inflation has fallen substantially since its peak in 2022, it picked up materially in the second half of 2025

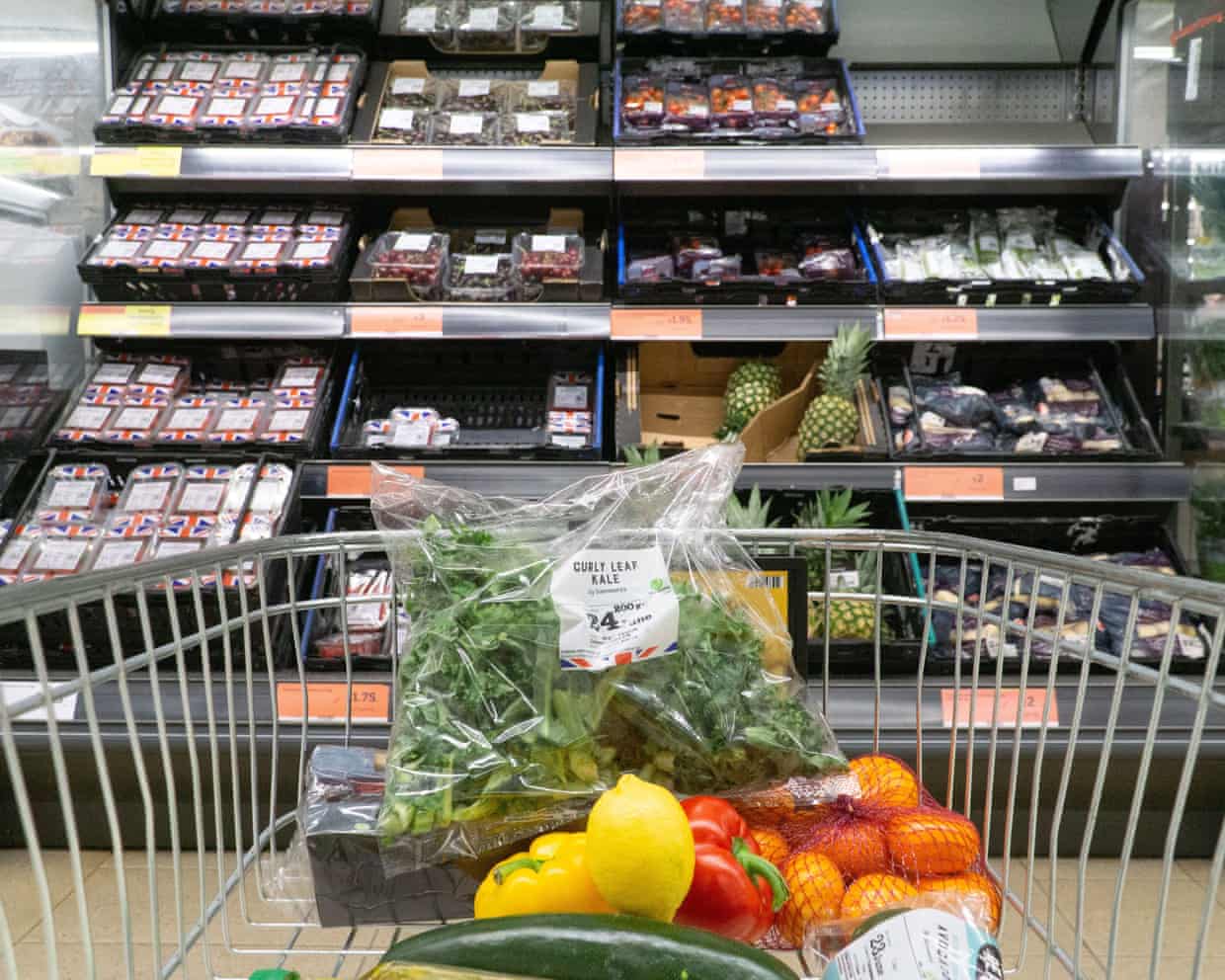

UK shoppers buy more fruit and yoghurt in healthy start to 2026

Britons started 2026 by buying more healthy food such as fruit and yoghurt as they attempted to hit new year health goals, while grocery price inflation eased to the lowest level since April, research has shown.Annual grocery inflation fell back to 4% in the four weeks to 25 January from 4.7% in December, offering some relief for shoppers, according to a monthly snapshot of the grocery sector from the research company Worldpanel by Numerator.Consumers turned to healthy eating, it said, with sales volumes of fresh fruit and dried pulses up 6% year on year, while fresh fish was up 5%, poultry 3% and chilled yoghurt 4%. Cottage cheese sales jumped by 50% and it was bought by 2

UK privacy watchdog opens inquiry into X over Grok AI sexual deepfakes

Elon Musk’s X and xAI companies are under formal investigation by the UK’s data protection watchdog after the Grok AI tool produced indecent deepfakes without people’s consent.The Information Commissioner’s Office is investigating whether the social media platform and its parent broke GDPR, the data protection law.It said the creation and circulation of the images on social media raised serious concerns under the UK’s data regime, such as whether “appropriate safeguards were built into Grok’s design and deployment”.The move came after French prosecutors raided the Paris headquarters of X as part of an investigation into alleged offences including the spreading of child abuse images and sexually explicit deepfakes.X became the subject of heavy public criticism in December and January when the platform’s account for the Grok AI tool was used to mass-produce partially nudified images of girls and women

Anthropic’s launch of AI legal tool hits shares in European data companies

European publishing and legal software companies have suffered sharp declines in their share prices after the US artificial intelligence startup Anthropic revealed a tool for use by companies’ legal departments.Anthropic, the company behind the chatbot Claude, said its tool could automate legal work such as contract reviewing, non-disclosure agreement triage, compliance workflows, legal briefings and templated responses.Shares in the UK publishing group Pearson fell by nearly 8% on the news, and shares in the information and analytics company Relx plunged 14%. The software company Sage lost 10% in London and the Dutch software company Wolters Kluwer lost 13% in Amsterdam.Shares in the London Stock Exchange Group fell by 13% and the credit reporting company Experian dropped by 7% in London, amid fears over the impact of AI on data companies
