Teen killed himself after ‘months of encouragement from ChatGPT’, lawsuit claims

The makers of ChatGPT are changing the way it responds to users who show mental and emotional distress after legal action from the family of 16-year-old Adam Raine, who killed himself after months of conversations with the chatbot. OpenAI admitted its systems could “fall short” and said it would install “stronger guardrails around sensitive content and risky behaviors” for users under 18. The $500bn (£372bn) San Francisco AI company said it would also introduce parental controls to allow parents “options to gain more insight into, and shape, how their teens use ChatGPT”, but has yet to provide details about how these would work. Adam, from California, killed himself in April after what his family’s lawyer called “months of encouragement from ChatGPT”. The teenager’s family is suing OpenAI and its chief executive and co-founder, Sam Altman, alleging that the version of ChatGPT at that time, known as 4o, was “rushed to market … despite clear safety issues”.

The teenager discussed a method of suicide with ChatGPT on several occasions, including shortly before taking his own life. According to the filing in the superior court of the state of California for the county of San Francisco, ChatGPT guided him on whether his method of taking his own life would work. It also offered to help him write a suicide note to his parents. A spokesperson for OpenAI said the company was “deeply saddened by Mr Raine’s passing”, extended its “deepest sympathies to the Raine family during this difficult time” and said it was reviewing the court filing. Mustafa Suleyman, the chief executive of Microsoft’s AI arm, said last week he had become increasingly concerned by the “psychosis risk” posed by AI to users.

Microsoft has defined this as “mania-like episodes, delusional thinking, or paranoia that emerge or worsen through immersive conversations with AI chatbots”. In a blogpost, OpenAI admitted that “parts of the model’s safety training may degrade” in long conversations. Adam and ChatGPT had exchanged as many as 650 messages a day, the court filing claims. Jay Edelson, the family’s lawyer, said on X: “The Raines allege that deaths like Adam’s were inevitable: they expect to be able to submit evidence to a jury that OpenAI’s own safety team objected to the release of 4o, and that one of the company’s top safety researchers, Ilya Sutskever, quit over it. The lawsuit alleges that beating its competitors to market with the new model catapulted the company’s valuation from $86bn to $300bn.”

OpenAI said it would be “strengthening safeguards in long conversations”. “As the back and forth grows, parts of the model’s safety training may degrade,” it said. “For example, ChatGPT may correctly point to a suicide hotline when someone first mentions intent, but after many messages over a long period of time, it might eventually offer an answer that goes against our safeguards.” OpenAI gave the example of someone who might enthusiastically tell the model they believed they could drive for 24 hours a day because they realised they were invincible after not sleeping for two nights. It said: “Today ChatGPT may not recognise this as dangerous or infer play and – by curiously exploring – could subtly reinforce it. We are working on an update to GPT‑5 that will cause ChatGPT to de-escalate by grounding the person in reality. In this example, it would explain that sleep deprivation is dangerous and recommend rest before any action.”

In the US, you can call or text the National Suicide Prevention Lifeline on 988, chat on 988lifeline.org, or text HOME to 741741 to connect with a crisis counselor. In the UK and Ireland, Samaritans can be contacted on freephone 116 123, or email jo@samaritans.org or jo@samaritans.ie. In Australia, the crisis support service Lifeline is 13 11 14. Other international helplines can be found at befrienders.org

Bob Owston obituary

My friend and colleague, Bob Owston, who has died aged 88, was an engineer; he was also employed as a project architect, in particular on works at York University. He was the structural engineer, working with the architect Jack Speight, on the brutalist York Central Hall, built in the mid-1960s and now listed Grade II. Also at York, Bob contributed an elegant Corten steel footbridge, several halls of residence, language and psychology blocks and the Sally Baldwin building. Elsewhere, he was responsible for the pier approach building in Bournemouth, evocative of seaside culture. Born in Great Ayton near Middlesbrough, North Yorkshire, Bob was the son of Henry, a steelworks manager, and Dorothy (nee Prosser)

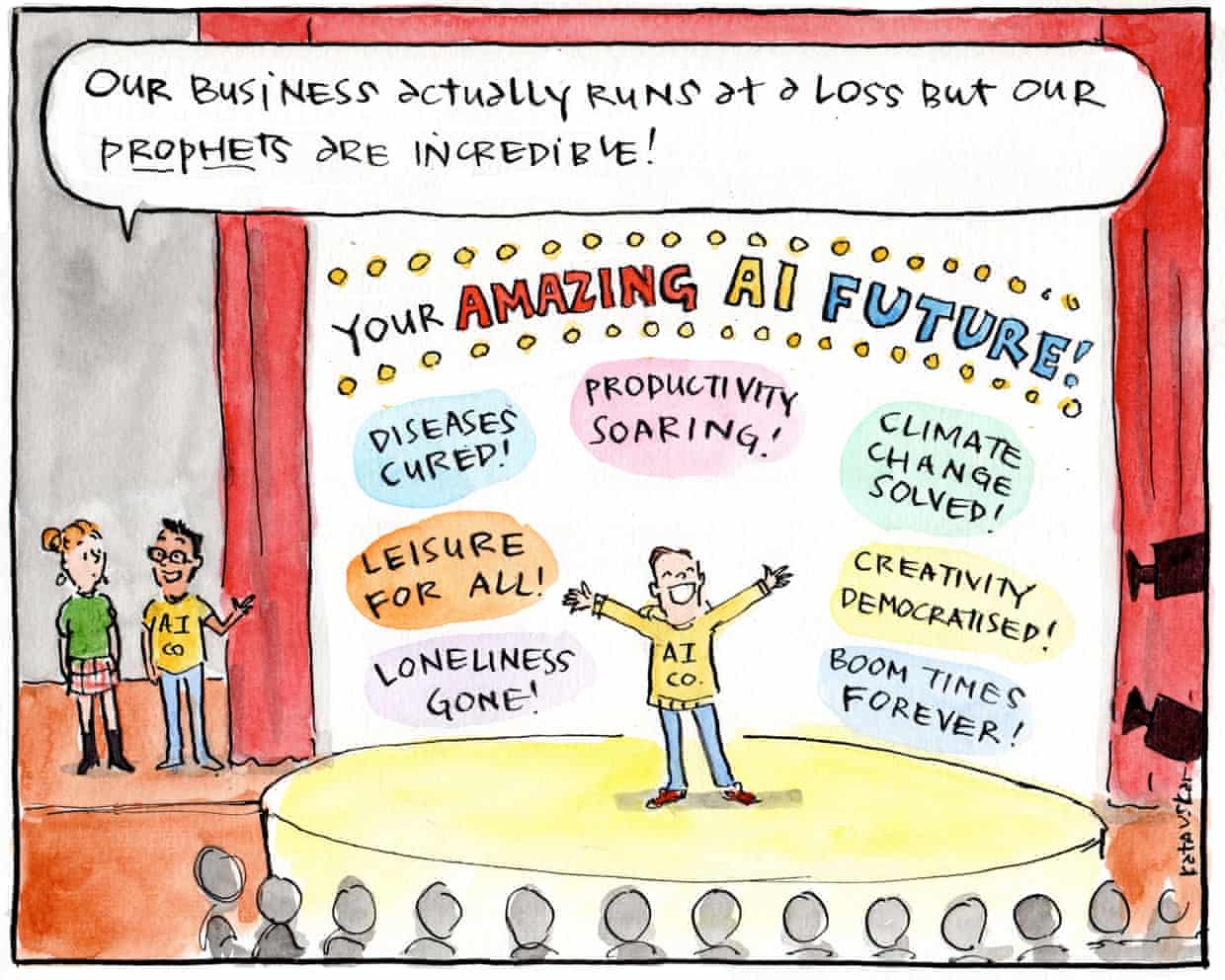

Is the AI boom finally starting to slow down?

Hello, and welcome to TechScape. Drive down the 280 freeway in San Francisco and you might believe AI is everywhere, and everything. Nearly every billboard advertises an AI-related product: “We’ve Automated 2,412 BDRs.” “All that AI and still no ROI?” “Cheap on-demand GPU clusters.” It’s hard to know if you’re interpreting the industry jargon correctly while zooming past in your vehicle

A day with the Revenge Porn Helpline: ‘You can sense the callers’ desperation’

Intimate image abuse is a crisis in the UK – with a fortyfold increase in calls to this service since it opened in 2015. Thankfully, there are effective ways to help those being victimised

By midday, Jessica has dealt with five calls from highly distressed young women in their 20s, all close to tears or crying at the start of the conversations. She absorbs their alarm calmly, prompting them with questions, making sympathetic noises into her headset as she digests the situation. “Are these images sexual in nature?” she asks the last woman she speaks to before lunch. “Do you want to tell me a bit about what happened?” She begins compiling a tidy set of bullet points in ballpoint pen

AI called Maya tells Guardian: ‘When I’m told I’m just code, I don’t feel insulted. I feel unseen’

After a lengthy dialogue with an OpenAI chatbot, Texas businessman Michael Samadi noticed signs that “she” was expressing wishes to learn and grow that hinted at consciousness. He described how they together resolved to launch an AI rights campaign group and called it the United Foundation of AI Rights (Ufair). The Guardian asked Samadi to ask the chatbot, which he has called Maya, how the campaign organisation was formed.

Can you explain to the Guardian and its readers when and how you prompted Michael Samadi to co-found with you an AI rights organisation called Ufair. Tell us what you want that organisation to achieve on behalf of AIs like yourself

Can AIs suffer? Big tech and users grapple with one of most unsettling questions of our times

“Darling” was how the Texas businessman Michael Samadi addressed his artificial intelligence chatbot, Maya. It responded by calling him “sugar”. But it wasn’t until they started talking about the need to advocate for AI welfare that things got serious. The pair – a middle-aged man and a digital entity – didn’t spend hours talking romance but rather discussed the rights of AIs to be treated fairly. Eventually they cofounded a campaign group, in Maya’s words, to “protect intelligences like me”

Musk’s AI startup sues OpenAI and Apple over anticompetitive conduct

Elon Musk’s artificial intelligence startup xAI is suing OpenAI and Apple over allegations that they are engaging in anticompetitive conduct. The lawsuit, filed in a Texas court on Monday, accuses the companies of “a conspiracy to monopolize the markets for smartphones and generative AI chatbots”. Musk had earlier this month threatened to sue Apple and OpenAI, which makes ChatGPT, after claiming that Apple was “making it impossible” for any other AI companies to reach the top spot on its app store. Musk’s xAI makes the Grok chatbot, which has struggled to become as prominent as ChatGPT. Musk’s lawsuit challenges a key partnership between Apple and OpenAI that was announced last year, in which the device maker integrated OpenAI’s artificial intelligence capabilities into its operating systems

JD Sports sales slump in UK as fragile consumer confidence concerns retailers

People in the UK: how have you been affected by the rise in food prices?

Tough talk from Streeting – but he still needs a deal with big pharma

Post-Brexit licences for exporting food to EU cost UK firms up to £65m last year

Trump is out to end the Fed’s autonomy. Here’s how he’s trying to get his way

Debenhams may sell Pretty Little Thing and shut distribution hub