‘The biggest decision yet’: Jared Kaplan on allowing AI to train itself

Humanity will have to decide by 2030 whether to take the “ultimate risk” of letting artificial intelligence systems train themselves to become more powerful, one of the world’s leading AI scientists has said.

Jared Kaplan, the chief scientist and co-owner of the $180bn (£135bn) US startup Anthropic, said a choice was looming about how much autonomy the systems should be given to evolve. The move could trigger a beneficial “intelligence explosion” – or be the moment humans end up losing control.

In an interview about the intensely competitive race to reach artificial general intelligence (AGI) – sometimes called superintelligence – Kaplan urged international governments and society to engage in what he called “the biggest decision”.

Anthropic is part of a pack of frontier AI companies including OpenAI, Google DeepMind, xAI, Meta and Chinese rivals led by DeepSeek, racing for AI dominance.

Its widely used AI assistant, Claude, has become particularly popular among business customers.

Kaplan said that while efforts to align the rapidly advancing technology to human interests had to date been successful, freeing it to recursively self-improve “is in some ways the ultimate risk, because it’s kind of like letting AI kind of go”. The decision could come between 2027 and 2030, he said.

“If you imagine you create this process where you have an AI that is smarter than you, or about as smart as you, it’s [then] making an AI that’s much smarter.” “It sounds like a kind of scary process. You don’t know where you end up.”

Kaplan has gone from being a theoretical physicist to an AI billionaire in seven years working in the field. In a wide-ranging interview, he also said:

- AI systems will be capable of doing “most white-collar work” in two to three years.
- That his six-year-old son will never be better than an AI at academic work such as writing an essay or doing a maths exam.
- That it was right to worry about humans losing control of the technology if AIs start to improve themselves.
- The stakes in the race to AGI feel “daunting”.

The best-case scenario could enable AI to accelerate biomedical research, improve health and cybersecurity, boost productivity, give people more free time and help humans flourish.

Kaplan met the Guardian at Anthropic’s headquarters in San Francisco, where the interior of knitted rugs and upbeat jazz music belies the existential concerns about the technology being developed.

Kaplan is a Stanford- and Harvard-educated professor of physics who researched at Johns Hopkins University and at Cern in Switzerland before joining OpenAI in 2019 and co-founding Anthropic in 2021.

He is not alone at Anthropic in voicing concerns.

One of his co-founders, Jack Clark, said in October he was both an optimist and “deeply afraid” about the trajectory of AI, which he called “a real and mysterious creature, not a simple and predictable machine”.

Kaplan said he was very optimistic about the alignment of AI systems with the interests of humanity up to the level of human intelligence, but was concerned about the consequences if and when they exceed that threshold.

He said: “If you imagine you create this process where you have an AI that is smarter than you, or about as smart as you, it’s [then] making an AI that’s much smarter. It’s going to enlist that AI help to make an AI smarter than that. It sounds like a kind of scary process. You don’t know where you end up.”

Doubt has been cast on the gains made from deploying AIs in the economy. Outside Anthropic’s headquarters, a billboard for another tech company pointedly asked “All that AI and no ROI?”, a reference to return on investment. A Harvard Business Review study in September said AI “workslop” – substandard AI-enabled work that humans have to fix – was reducing productivity.

Some of the clearest gains have been in using AIs to write computer code.

In September, Anthropic revealed its cutting-edge AI, Claude Sonnet 4.5, a model for computer coding that can build AI agents and autonomously use computers. It maintained focus on complex multistep coding tasks for 30 hours unbroken, and Kaplan said that in some cases using AI was doubling the speed with which his firm’s programmers were able to work.

But in November Anthropic said it believed a Chinese state-sponsored group had manipulated its Claude Code tool – not only to help humans launch a cyber-attack but to execute about 30 attacks itself, some of which were successful.

Kaplan said allowing AIs to train the next AIs was “an extremely high-stakes decision to make”.

“That’s the thing that we view as maybe the biggest decision or scariest thing to do … once no one’s involved in the process, you don’t really know. You can start a process and say, ‘Oh, it’s going very well. It’s exactly what we expected. It’s very safe.’ But you don’t know – it’s a dynamic process. Where does that lead?”

He said if recursive self-improvement, as this process is sometimes known, was allowed in an uncontrolled way there were two risks.

“One is do you lose control over it? Do you even know what the AIs are doing? The main question there is: are the AIs good for humanity? Are they helpful? Are they going to be harmless? Do they understand people? Are they going to allow people to continue to have agency over their lives and over the world?”

The second risk is to security, resulting from the self-taught AIs exceeding human capabilities at scientific research and technological development.

“It seems very dangerous for it to fall into the wrong hands,” he said. “You can imagine some person [deciding]: ‘I want this AI to just be my slave. I want it to enact my will.’ I think preventing power grabs – preventing misuse of the technology – is also very important.”

Independent research into frontier AI models, including ChatGPT, shows the length of tasks AIs can do has been doubling every seven months.

Kaplan said he was concerned that the speed of progress meant humanity at large had not been able to get used to the technology before it leaped forward again.

“I am worried about that … people like me could all be crazy, and it could all plateau,” he said. “Maybe the best AI ever is the AI that we have right now. But we really don’t think that’s the case. We think it’s going to keep getting better.”

He added: “It’s something where it’s moving very quickly and people don’t necessarily have time to absorb it or figure out what to do.”

Anthropic is racing with OpenAI, Google DeepMind and xAI to develop ever more advanced AI systems in the push to AGI. Kaplan described the atmosphere in the Bay Area as “definitely very intense, both from the stakes of AI and from the competitiveness viewpoint”.

“The way that we think about it is [that] everything is on this exponential trend in terms of investment, revenue, capabilities of AI, how complex the tasks [are that] AI can do,” he said.

The speed of progress means the risk of one of the racers slipping up and falling behind is great. “The stakes are high for staying on the frontier, in the sense that you fall off the exponential [curve] and very quickly you could be very far behind at least in terms of resources.”

By 2030, datacentres are projected to require $6.7tn worldwide to keep pace with the demand for compute power, McKinsey has estimated. Investors want to back the companies closest to the front of the pack.

At the same time, Anthropic is known for encouraging regulation of AI. Its statement of purpose includes a section headlined: “We build safer systems.”

“We don’t really want it to be a Sputnik-like situation where the government suddenly wakes up and is like, ‘Oh, wow, AI is a big deal’ … We want policymakers to be as informed as possible along the trajectory so they can take it into account.”

In October, Anthropic’s position triggered a put-down from Donald Trump’s White House. David Sacks, the US president’s AI adviser, accused Anthropic of “fearmongering” to encourage state-by-state regulation that would benefit its position and damage startups.

After Sacks claimed it had positioned itself as “a foe” of the Trump administration, Dario Amodei, Kaplan’s co-founder and Anthropic’s chief executive, hit back by saying the company had publicly praised Trump’s AI action plan, worked with Republicans and that, like the White House, it wanted to maintain the US’s lead in AI.

Production of French-German fighter jet threatened by rivalries, chief executive says

The leaders of France and Germany have a “strong willingness” to build a new fighter jet together despite bitter internal rivalries, according to the chief executive of engine manufacturer Safran. A row over who should lead between French aerospace company Dassault and the German unit of Airbus has threatened to break apart the countries’ efforts to make a next-generation fighter jet. France’s Safran, one of the world’s biggest engine-makers, is due to co-produce turbines for the aircraft. Its chief executive, Olivier Andriès, told reporters in London on Tuesday that relations were “very strained” between the lead partners on the Future Combat Air System (known as Scaf in France). However, he added that the offices of the French president, Emmanuel Macron, and the German chancellor, Friedrich Merz, wanted a solution. “Obviously the relationship between Airbus and Dassault is extremely difficult,” Andriès said.

Tunbridge Wells water cut likely to last after treatment problem reoccurs

Water shortages in Tunbridge Wells that have forced schools and businesses to close look likely to continue for at least another day after the local water company said the problem with its plant had reoccurred. The Drinking Water Inspectorate (DWI) has said it will investigate. Thousands of homes in the Kent town have been without water since the weekend after South East Water accidentally added the wrong chemicals to the tap water supply. Schools across the area have been shut, and residents have been filling buckets with rainwater to flush toilets. Cats, dogs and guinea pigs have been given bottled mineral water to drink as the people of Tunbridge Wells wait for their water to be switched back on.

Sam Altman issues ‘code red’ at OpenAI as ChatGPT contends with rivals

Sam Altman has declared a “code red” at OpenAI to improve ChatGPT as the chatbot faces intense competition from rivals. According to a report by tech news site the Information, the chief executive of the San Francisco-based startup told staff in an internal memo: “We are at a critical time for ChatGPT.” OpenAI has been rattled by the success of Google’s latest AI model, Gemini 3, and is devoting more internal resources to improving ChatGPT. Last month, Altman told employees that the launch of Gemini 3, which has outperformed rivals on various benchmarks, could create “temporary economic headwinds” for the company. He added: “I expect the vibes out there to be rough for a bit.”

The fight to see clearly through big tech’s echo chambers

Hello, and welcome to TechScape. I’m your host, Blake Montgomery. Today, I’m mulling over whether to upgrade my iPhone 11 Pro. In tech news, there’s a narrative battle afoot in Silicon Valley, tips on avoiding the yearly smartphone upgrade cycle and new devices altogether, and artificial intelligence’s use in government, for better and for worse. The encroachment of technology can feel inevitable.

Serena Williams quietly re-enters drug-testing pool in step toward possible 2026 return

Serena Williams has taken the procedural move required of any player contemplating a competitive comeback, after the 23-time grand slam singles champion re-entered the International Tennis Integrity Agency’s (ITIA) registered testing pool for the first time since 2022. Williams, 44, has not played an official match since her run to the third round of the US Open more than three years ago. Although she described her departure at the time as “evolving away” from the sport rather than a hard retirement, she filed the paperwork with the ITIA that September that exempted her from the sport’s stringent whereabouts requirements. To return to competition, however, players must make themselves available for out-of-competition testing for six months before they are allowed to enter an event. Williams’s name appeared on the agency’s updated testing-pool list dated 6 October.

Men’s Rugby World Cup 2027: how the draw will work and the new format explained

A potential path to victory at the 2027 men’s Rugby World Cup will become clearer in Sydney on Wednesday when the draw for the tournament is made. Kicking off on 1 October 2027, the men’s event will feature 24 teams instead of 20, and will be the first to include a round of 16. Each tournament since 2003 has included 20 competing nations, and the previous format moved straight from the pool stage to the quarter-finals. There will be a total of 52 matches in 2027, up from 48. Instead of four pools of five there are six pools of four. The eventual winners will still be obliged to play seven matches to claim the trophy, but will play one pool match fewer, before the last-16 game for a place in the last eight.

UK ministers aim to ban cryptocurrency political donations over anonymity risks

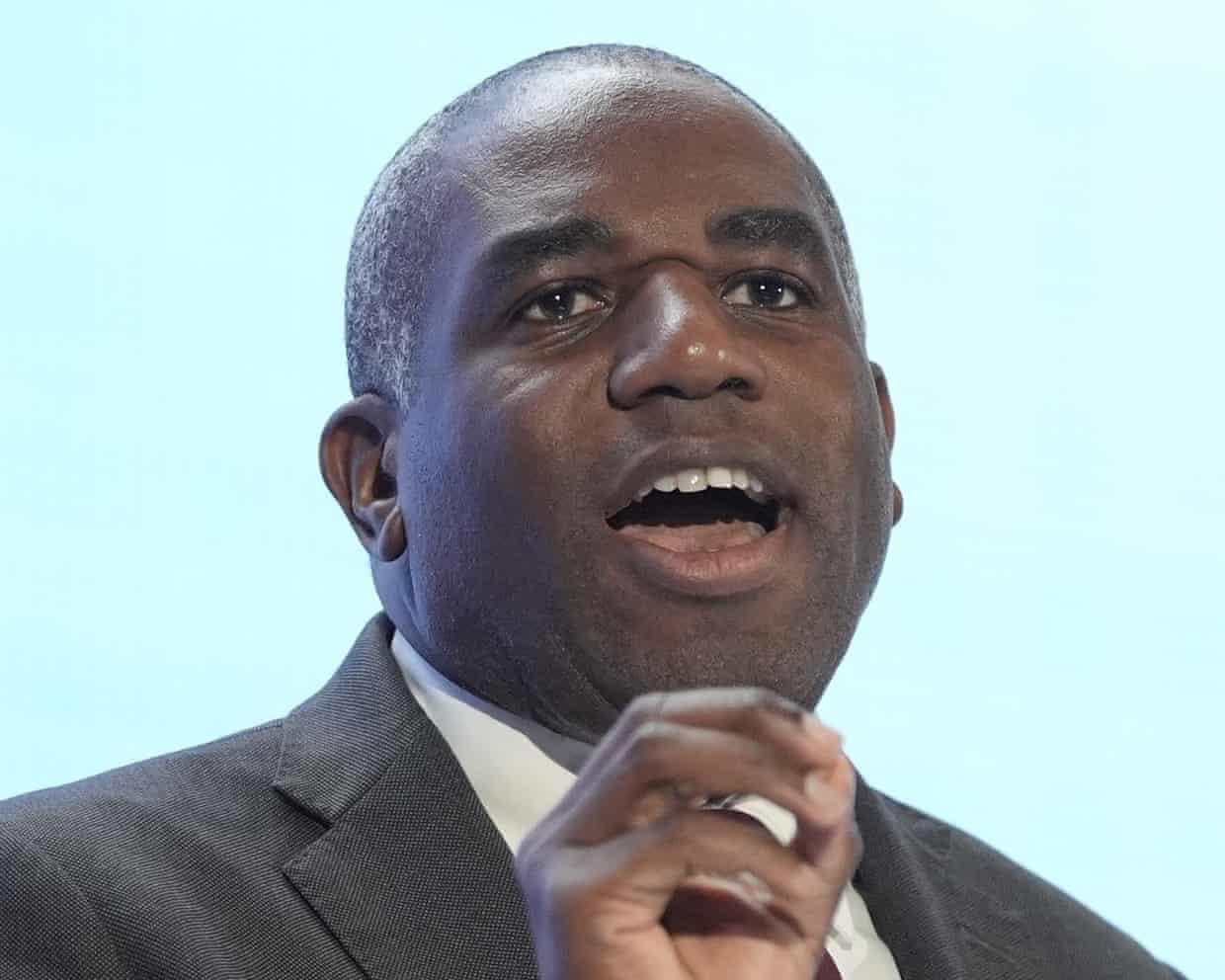

David Lammy tells of ‘traumatic’ racial abuse in youth after Farage allegations

Angela Rayner to lay amendment to speed up workers’ rights bill

UK terror watchdog warns national security plan ignores escalating online threats

Attorney general urges Nigel Farage to apologise over alleged racism and antisemitism

Starmer has little choice but to bind himself closer to his chancellor